
Personal homepage: 开着拖拉机回家 (Linux and big data operations), CSDN blog


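Contents

1. Version Information

1.1 Big Data Component Versions

1.2 Apache Components

1.3 Supported Database Versions

2. Uploading the Installation Package

3. Server Base Environment Configuration

3.1 Modifying the Configuration

3.2 Server Environment Configuration

3.3 Installing the MySQL Database

4. Installing Ambari-Server

4.1 Install ambari-server

4.2 Check the REPO Sources

5. HDP Installation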

Get Started

Select Version

Install Options

Confirm Hosts

Choose Services

Assign Masters

Assign Slaves and Clients

Customize Services

CREDENTIALS

DATABASES

DIRECTORIES

ALL CONFIGURATIONS

Review

6. Enabling Kerberos

6.1 Checking the Kerberos Services

6.2 Enabling Kerberos in Ambari

Get Started

Configure Kerberos

Install and Test Kerberos Client

Configure Identities

Confirm Configuration

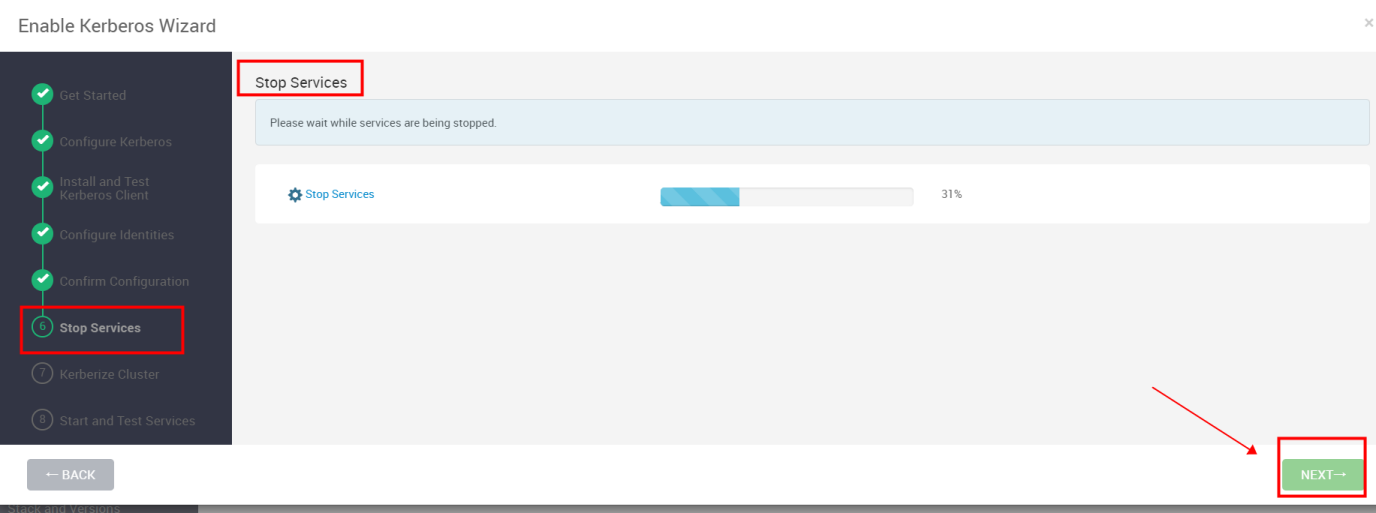

Stop Services

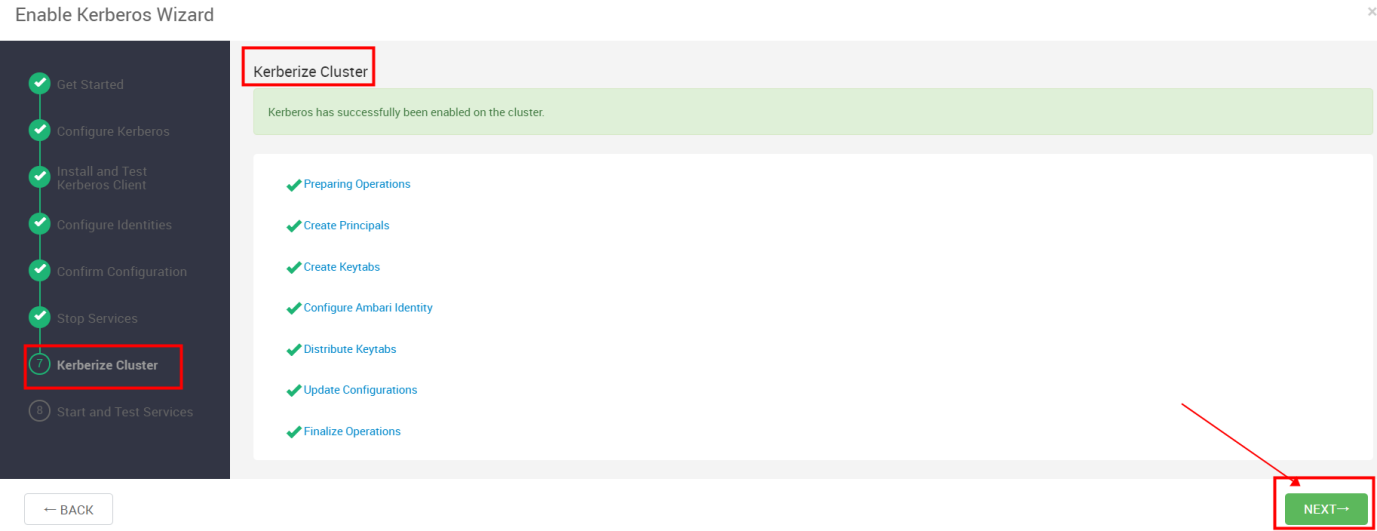

Kerberize Cluster

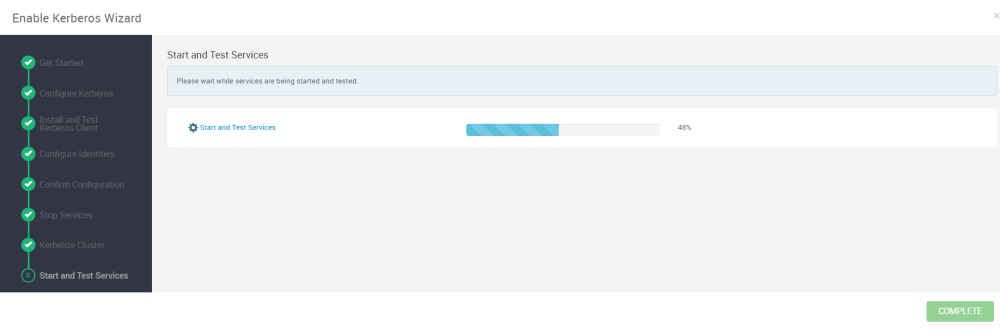

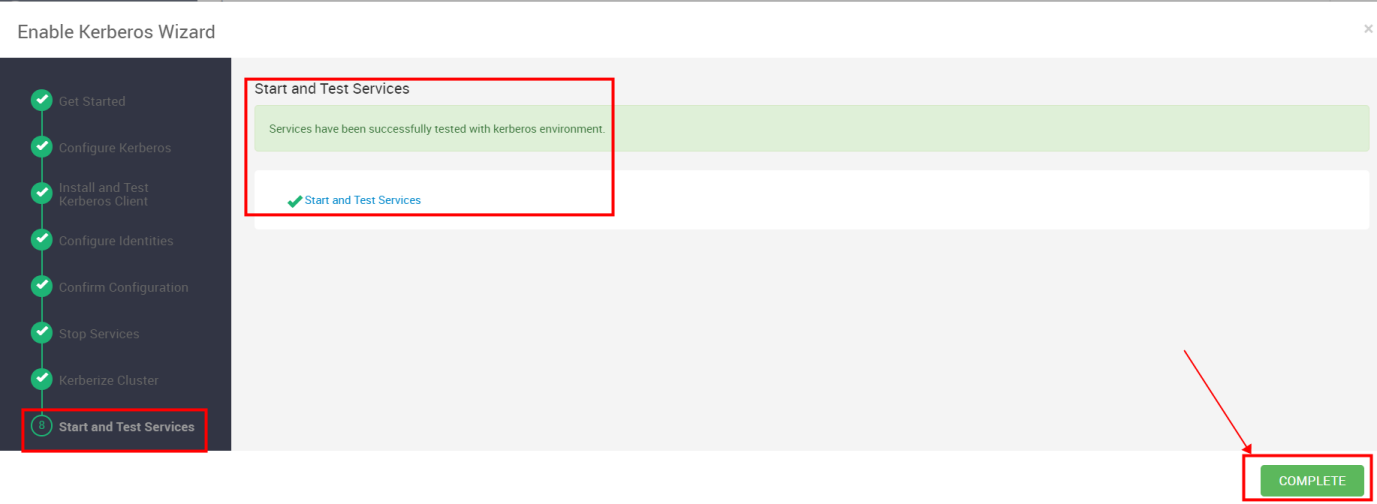

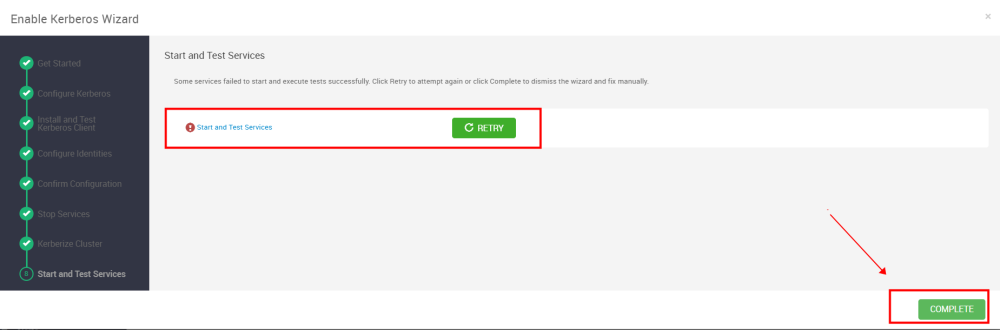

Start and Test Services

7. Script Appendix

1. Version Information

1.1 Big Data Component Versions

| Component | Version |
| --- | --- |
| Operating system | CentOS 7.2-7.9 |
| Ambari | 2.7.4 |
| HDP | 3.1.4.0 |
| HDP-GPL | 3.1.4.0 |
| HDP-UTILS | 1.1.0.22 |
| JDK | jdk-8u162-linux-x64.tar.gz |
| MySQL | 5.7 |

1.2 Apache Components

| Component | Apache version |
| --- | --- |
| Apache Ambari | 2.7.4 |
| Apache Zookeeper | 3.4.6 |
| Apache Hadoop | 3.1.1 |
| Apache Hive | 3.1.0 |
| Apache HBase | 2.0.2 |
| Apache Ranger | 1.2.0.3.1 |
| Apache Spark 2 | 2.3.0 |
| Apache TEZ | 0.9.1 |

1.3 Supported Database Versions

| Name | Version |
| --- | --- |
| PostgreSQL | 10.7, 10.5, 10.2, 9.6 |
| MySQL | 5.7 |
| MariaDB | 10.2 |

2. Uploading the Installation Package

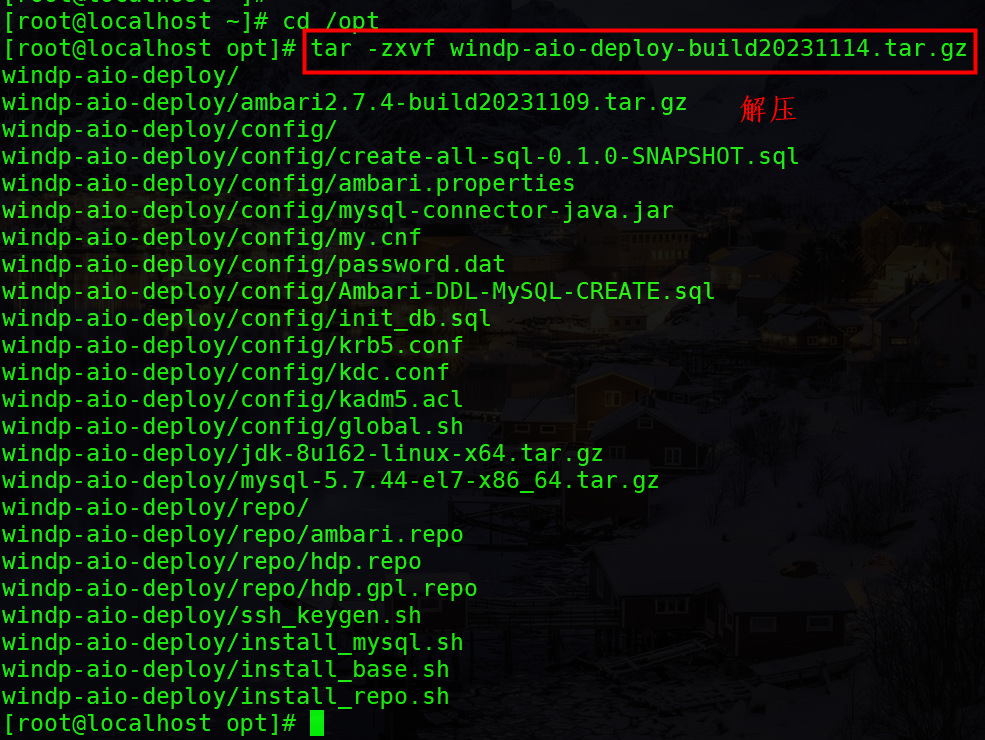

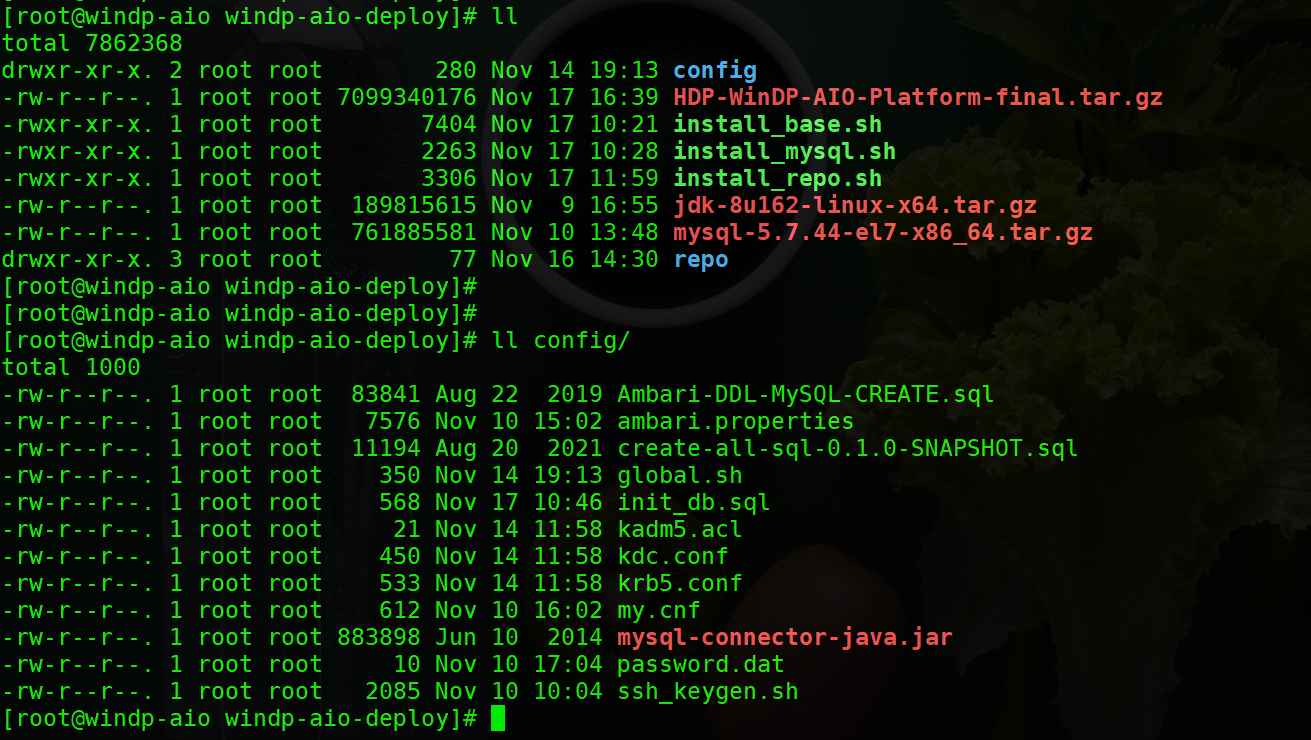

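Upload the installation package to the Linux server with a file transfer tool, for example to the /opt directory. Then enter /opt and extract the package with the commands below (the build date in the package name may differ):

```bash
cd /opt
tar -zxvf windp-aio-deploy-build20231114.tar.gz
cd /opt/windp-aio-deploy
```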

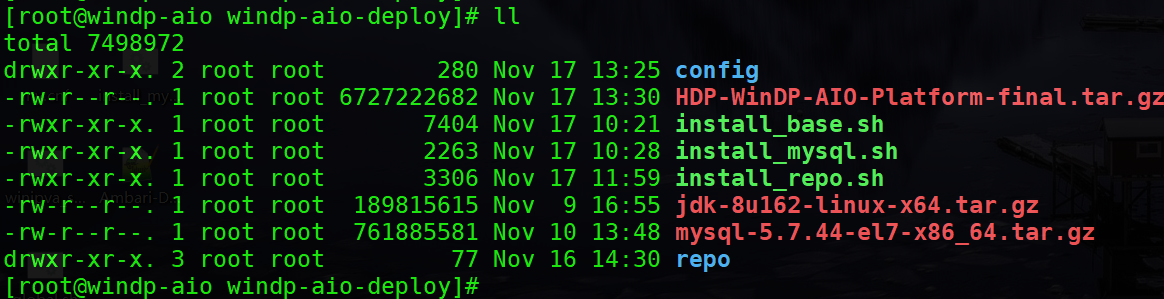
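Since the build date in the package name can change, a small helper can pick the newest package instead of typing the name by hand. This is a minimal sketch: `latest_deploy_tarball` is a hypothetical helper name, and it assumes the date is embedded as `buildYYYYMMDD`, so that the lexicographically last file name is also the newest build.

```bash
#!/bin/bash
# Print the newest windp-aio-deploy-build*.tar.gz in a directory (default: /opt).
# Assumes names like windp-aio-deploy-buildYYYYMMDD.tar.gz, so the last name in
# sorted order is also the most recent build.
latest_deploy_tarball() {
    local dir latest f
    dir=${1:-/opt}
    latest=""
    for f in "$dir"/windp-aio-deploy-build*.tar.gz; do
        [ -e "$f" ] || continue   # the pattern matched nothing
        latest=$f                 # glob results are sorted, so the last match wins
    done
    [ -n "$latest" ] || return 1
    printf '%s\n' "$latest"
}
```

Usage: `tar -zxvf "$(latest_deploy_tarball /opt)" -C /opt`.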

安装包解压后目录结构:

windp-aio-deploy-buildxxxxxxxx.tar.gz 包内容说明

| 包、脚本和目录 | 说明 |

| HDP-WinDP-AIO-Platform-final.tar.gz | Ambari安装包 |

| jdk-8u162-linux-x64.tar.gz | JDK安装包 |

| mysql-5.7.44-el7-x86_64.tar.gz | MySQL安装包 |

| install_base.sh | 基础环境配置和初始化 |

| install_mysql.sh | MySQL安装 |

| install_repo.sh | HDP源配置和Ambari安装 |

| config | 配置文件 |

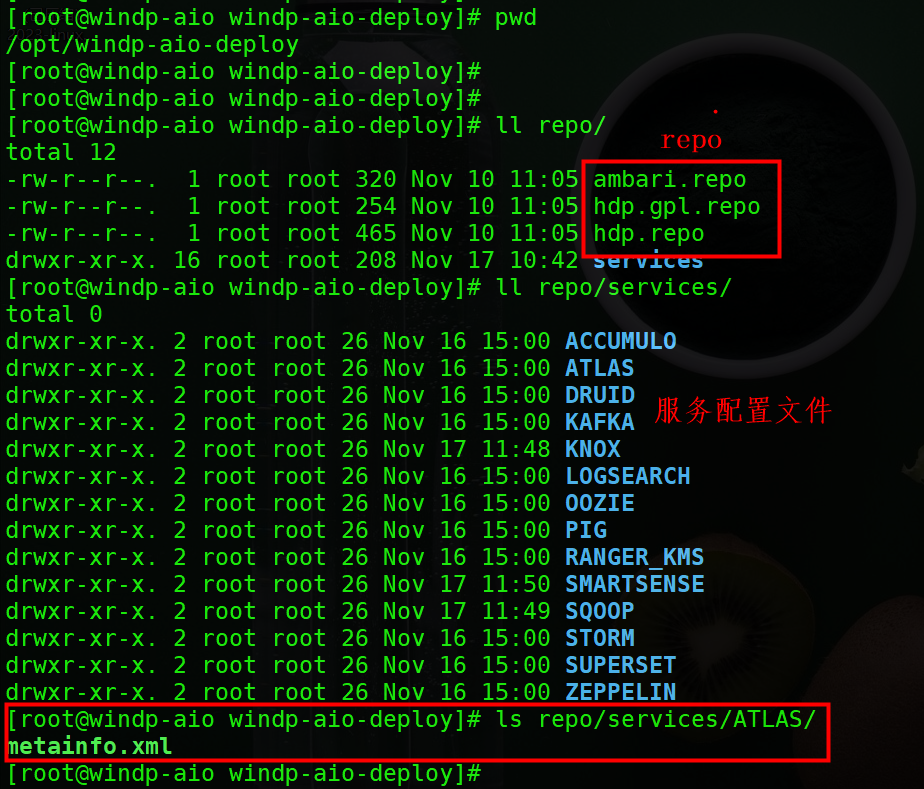

| repo | HDP-repo源文件 |

3. Server Base Environment Configuration

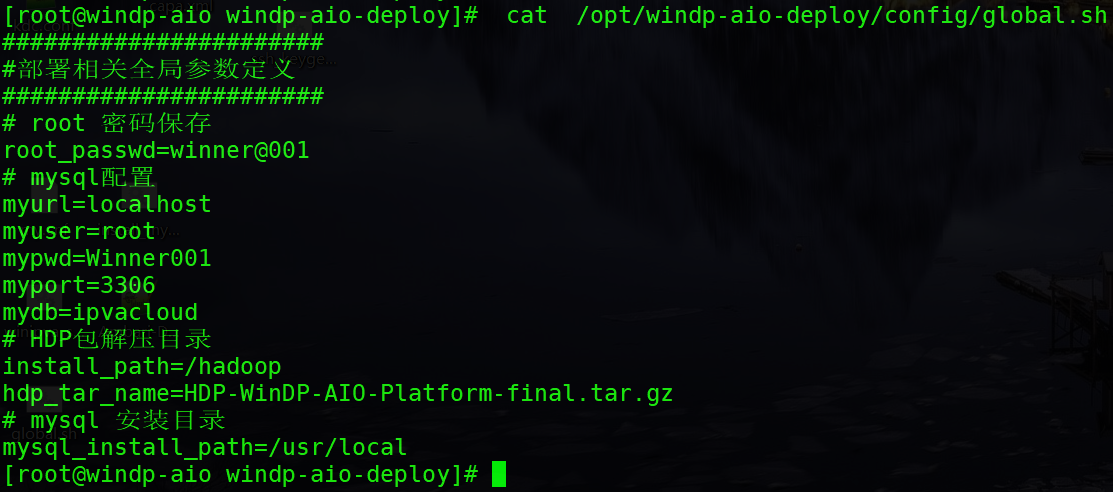

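3.1 Modifying the Configuration

Confirm that /opt is the installation directory; if it is not, adjust the paths accordingly. Check the values below before running the command, in particular the paths and `root_passwd`, which must be the server's actual root password (install_base.sh uses it for ssh-copy-id). Then run the following on the Linux server:

```bash
cat > /opt/windp-aio-deploy/config/global.sh << EOF
#######################
# Global deployment parameters
#######################
# root password
root_passwd=winner
# MySQL settings
myurl=127.0.0.1
myuser=root
mypwd=Winner001
myport=3306
mydb=ipvacloud
# HDP package extraction directory
install_path=/hadoop
hdp_tar_name=HDP-WinDP-AIO-Platform-final.tar.gz
# MySQL installation directory
mysql_install_path=/usr/local
EOF
```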

Run the following command to check that the file was saved correctly:

```bash
cat /opt/windp-aio-deploy/config/global.sh
```
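Besides reading the `cat` output, the file can be checked for empty values automatically. A minimal sketch: `check_global_config` is a hypothetical helper, and the list of required variables is taken from the global.sh shown above.

```bash
#!/bin/bash
# Check that global.sh defines every parameter the install scripts rely on.
check_global_config() {
    local file=${1:-/opt/windp-aio-deploy/config/global.sh}
    [ -r "$file" ] || { echo "cannot read $file" >&2; return 1; }
    # Source the file in a subshell so its variables do not leak into the caller.
    (
        . "$file"
        missing=0
        for var in root_passwd myurl myuser mypwd myport install_path hdp_tar_name mysql_install_path; do
            eval "val=\${$var:-}"
            if [ -z "$val" ]; then
                echo "missing value: $var" >&2
                missing=1
            fi
        done
        exit "$missing"
    )
}
```

Usage: `check_global_config && echo "global.sh looks complete"`.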

3.2 Server Environment Configuration

Run the following commands and wait for them to finish:

```bash
cd /opt/windp-aio-deploy/
sh install_base.sh
```

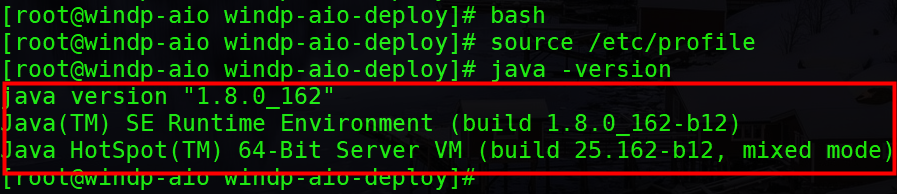

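Run the following commands to check whether the JDK is configured. If the JDK version information is displayed, as in the figure, the configuration succeeded.

```bash
source /etc/profile
java -version
```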
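To check the result in a script rather than by reading the output, the major version can be parsed from `java -version`, which writes to stderr. A minimal sketch; `java_major_version` is a hypothetical helper that handles both the legacy `1.8.0_162` scheme and newer `11.0.2`-style strings.

```bash
#!/bin/bash
# Read `java -version` output on stdin and print the major version (8, 11, ...).
java_major_version() {
    local ver
    # First quoted version string, e.g. java version "1.8.0_162"
    ver=$(sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
    [ -n "$ver" ] || return 1
    case "$ver" in
        1.*) ver=${ver#1.} ;;   # legacy scheme: 1.8.0_162 -> 8.0_162
    esac
    printf '%s\n' "${ver%%[._]*}"   # keep everything before the first . or _
}
```

Usage: `[ "$(java -version 2>&1 | java_major_version)" = 8 ] && echo "JDK 8 OK"`.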

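3.3 Installing the MySQL Database

Run the following commands and wait for the MySQL installation to finish (for details, see the CSDN post "[Linux] One-click MySQL 5.7 tar installation on CentOS 7 with a shell script"):

```bash
cd /opt/windp-aio-deploy/
sh install_mysql.sh
```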

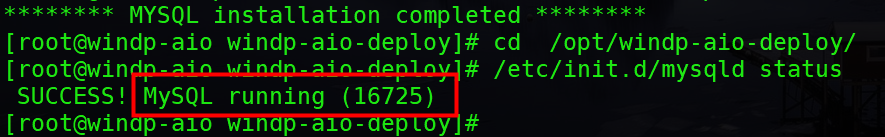

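Run the following command to check whether MySQL has started. "running" in the output, as in the figure, means MySQL started successfully.

```bash
cd /opt/windp-aio-deploy/
/etc/init.d/mysqld status
```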

4. Installing Ambari-Server

4.1 Install ambari-server

Run the following commands and wait for Ambari-Server to be configured and started; this takes about 10 minutes.

```bash
cd /opt/windp-aio-deploy/
sh install_repo.sh
```

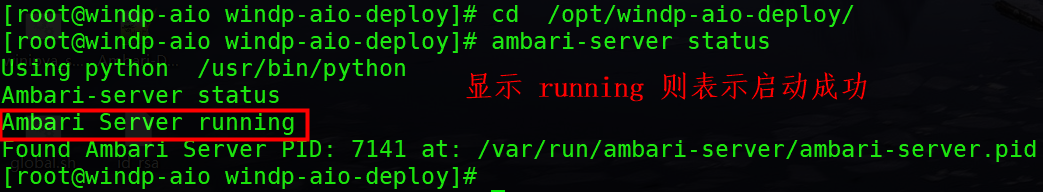

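Run the following command to check whether Ambari-Server has started. "running" in the output, as in the figure, means it started successfully.

```bash
ambari-server status
```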

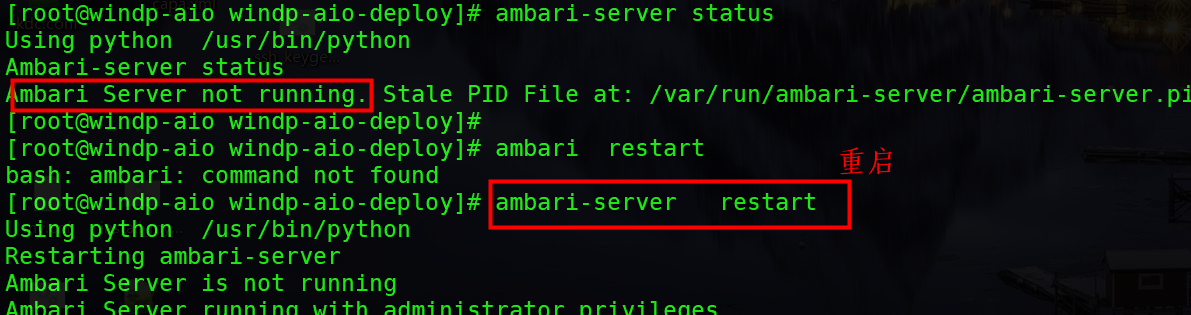

If Ambari-Server did not start, try restarting it:

```bash
ambari-server restart
```

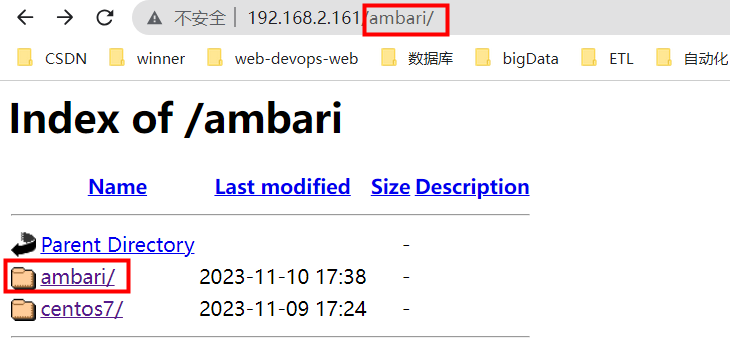

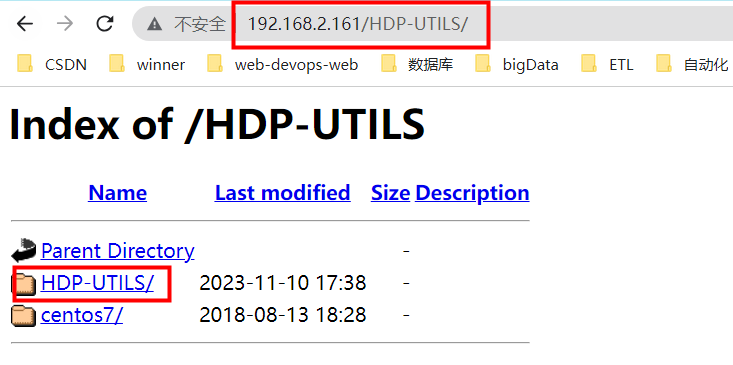

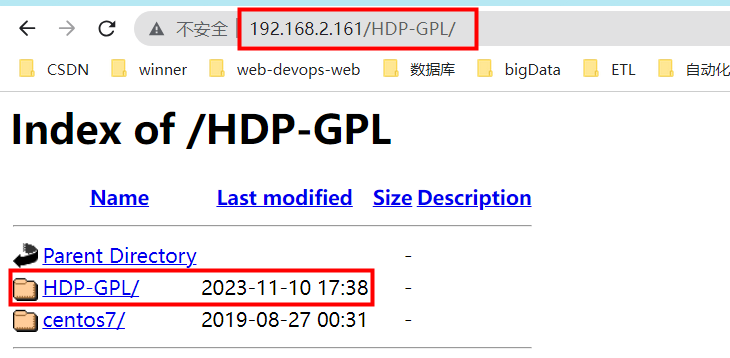
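Because Ambari-Server can take several minutes to come up, a polling loop is handier than re-running the status command by hand. A minimal sketch with a hypothetical `wait_for_status` helper; it assumes the status output contains "Ambari Server running" once the server is up (a bare `running` pattern would also match "not running"), so adjust the pattern to what your version prints. The same helper can wait on `/etc/init.d/mysqld status` with a pattern such as `MySQL running`.

```bash
#!/bin/bash
# Poll a status command until its output matches PATTERN (case-insensitive).
# Usage: wait_for_status TRIES INTERVAL_SECONDS PATTERN COMMAND [ARGS...]
wait_for_status() {
    local tries=$1 interval=$2 pattern=$3 i=1
    shift 3
    while [ "$i" -le "$tries" ]; do
        if "$@" 2>&1 | grep -qi -- "$pattern"; then
            return 0
        fi
        # Do not sleep after the final attempt.
        [ "$i" -lt "$tries" ] && sleep "$interval"
        i=$((i + 1))
    done
    return 1
}
```

Usage: `wait_for_status 20 30 "Ambari Server running" ambari-server status || ambari-server restart`.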

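4.2 Check the REPO Sources

The REPO sources configured by the script in the previous step can be viewed in a browser. Replace the example IP address with the IP address of the Linux host where WinDP-AIO is deployed. Example addresses:

- http://192.168.2.161/ambari/
- http://192.168.2.161/HDP/
- http://192.168.2.161/HDP-UTILS/
- http://192.168.2.161/HDP-GPL/

Note: if any of these addresses cannot be opened in the browser, the base environment is misconfigured and its configuration needs to be checked.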

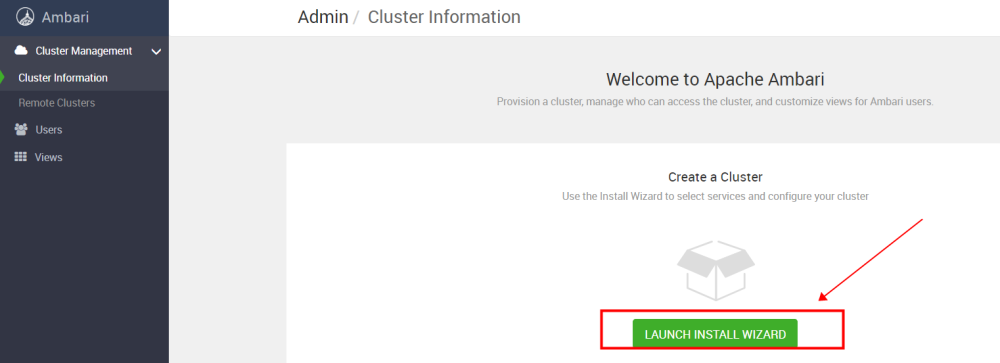
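The four URLs can also be checked from the command line instead of a browser. A minimal sketch, assuming `curl` is installed; `repo_urls` and `check_repo_urls` are hypothetical helper names, and the paths are the four listed above.

```bash
#!/bin/bash
# Print the four repository URLs published by httpd on the given host or IP.
repo_urls() {
    local host=$1 path
    for path in ambari HDP HDP-UTILS HDP-GPL; do
        printf 'http://%s/%s/\n' "$host" "$path"
    done
}

# Fetch each URL; report OK/FAIL and return non-zero if any request fails.
check_repo_urls() {
    local url rc=0
    for url in $(repo_urls "$1"); do
        if curl -fsS -o /dev/null --max-time 10 "$url"; then
            echo "OK   $url"
        else
            echo "FAIL $url" >&2
            rc=1
        fi
    done
    return "$rc"
}
```

Usage: `check_repo_urls 192.168.2.161`, replacing the IP with your own host's address.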

5. HDP Installation

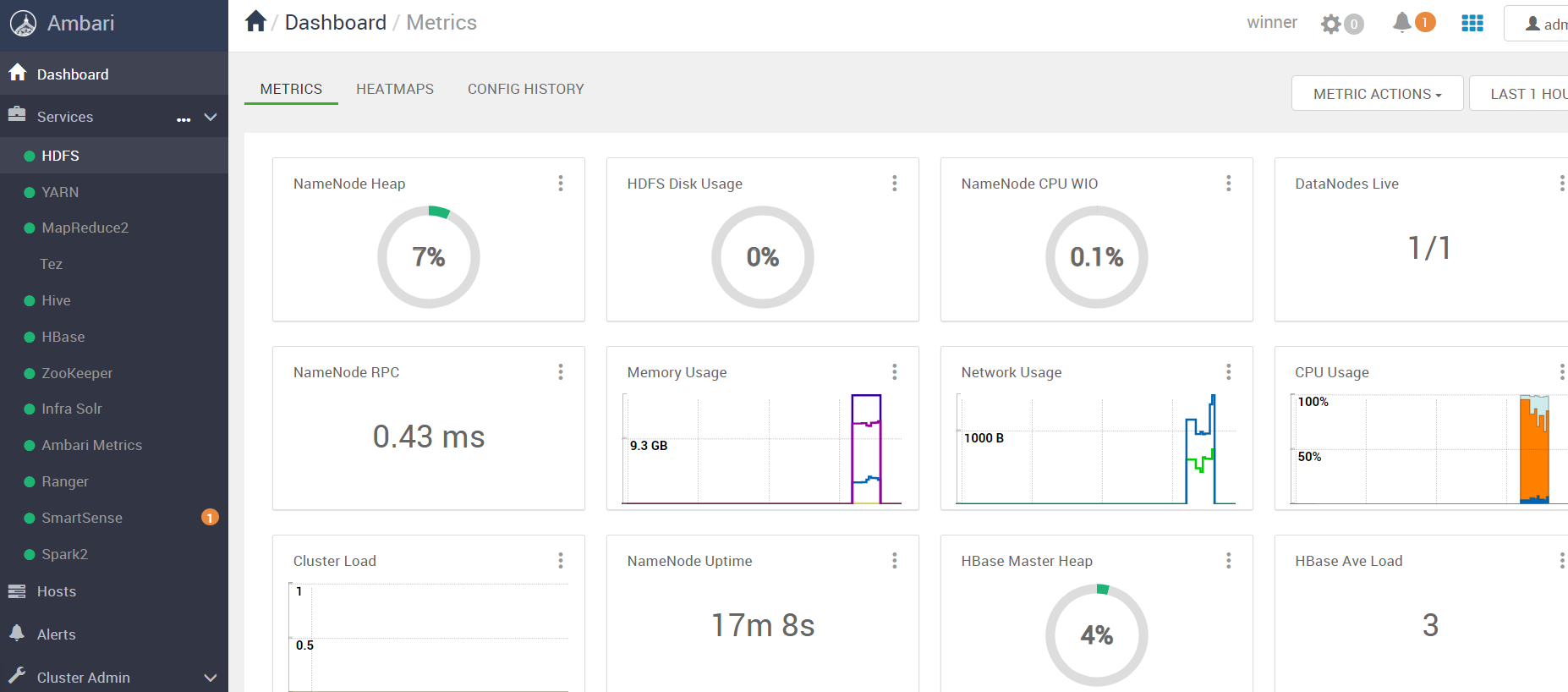

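Log in to Ambari-Server at IP:8080, for example http://192.168.2.161:8080/. The default username and password are both admin.

On first login the default page looks like the figure below; click the button in the red box.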

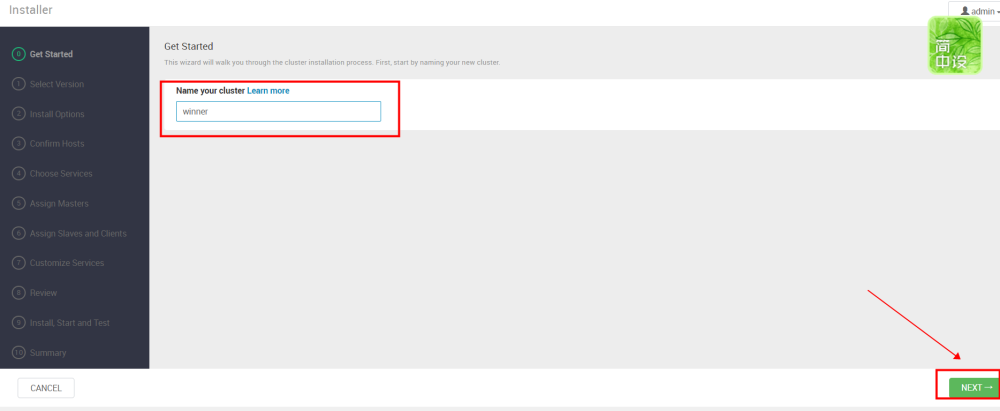

Get Started

Enter the cluster name "winner" and click NEXT.

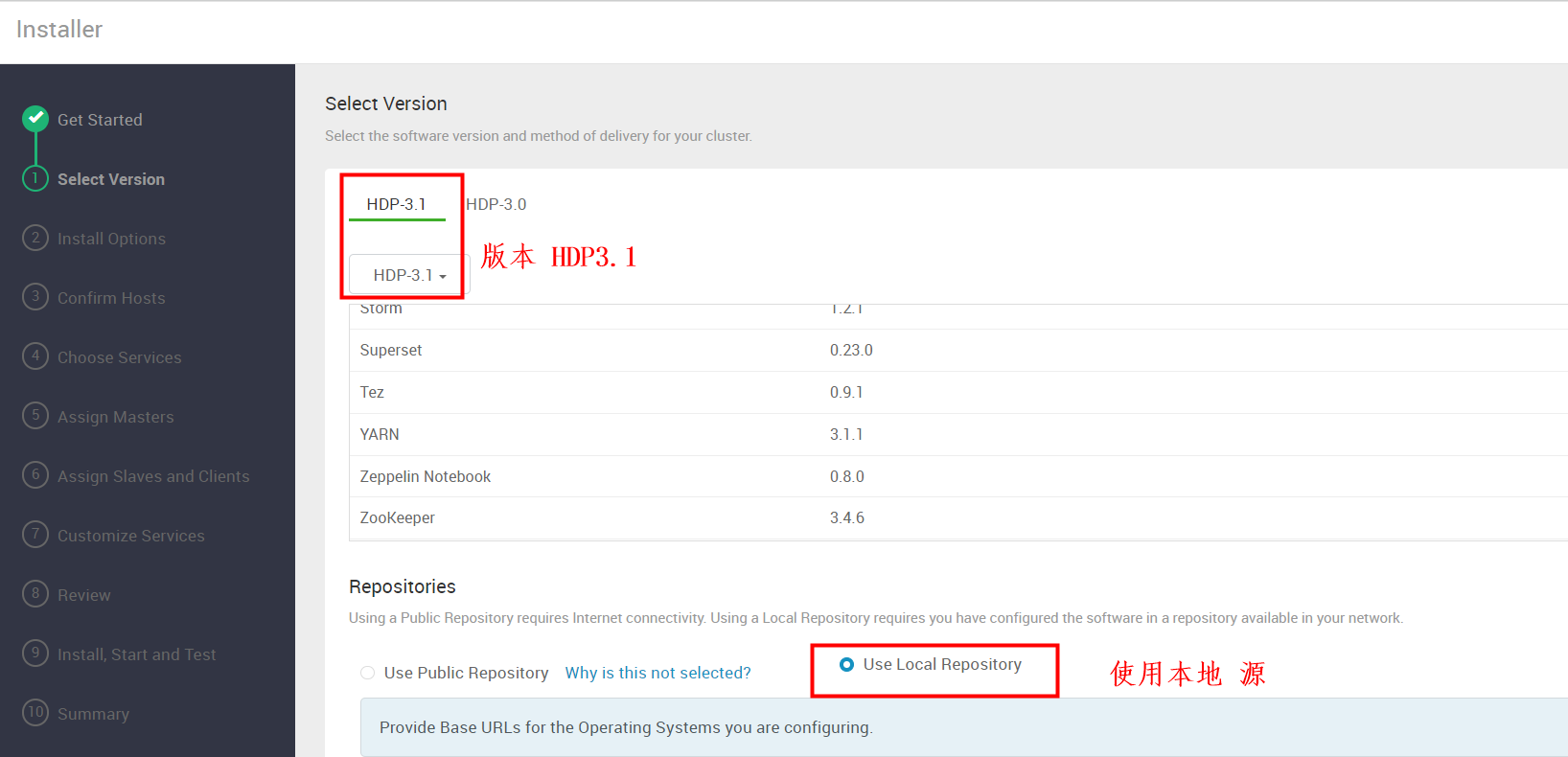

Select Version

Select the HDP version (3.1 is used here), and for the repository choose "Use Local Repository".

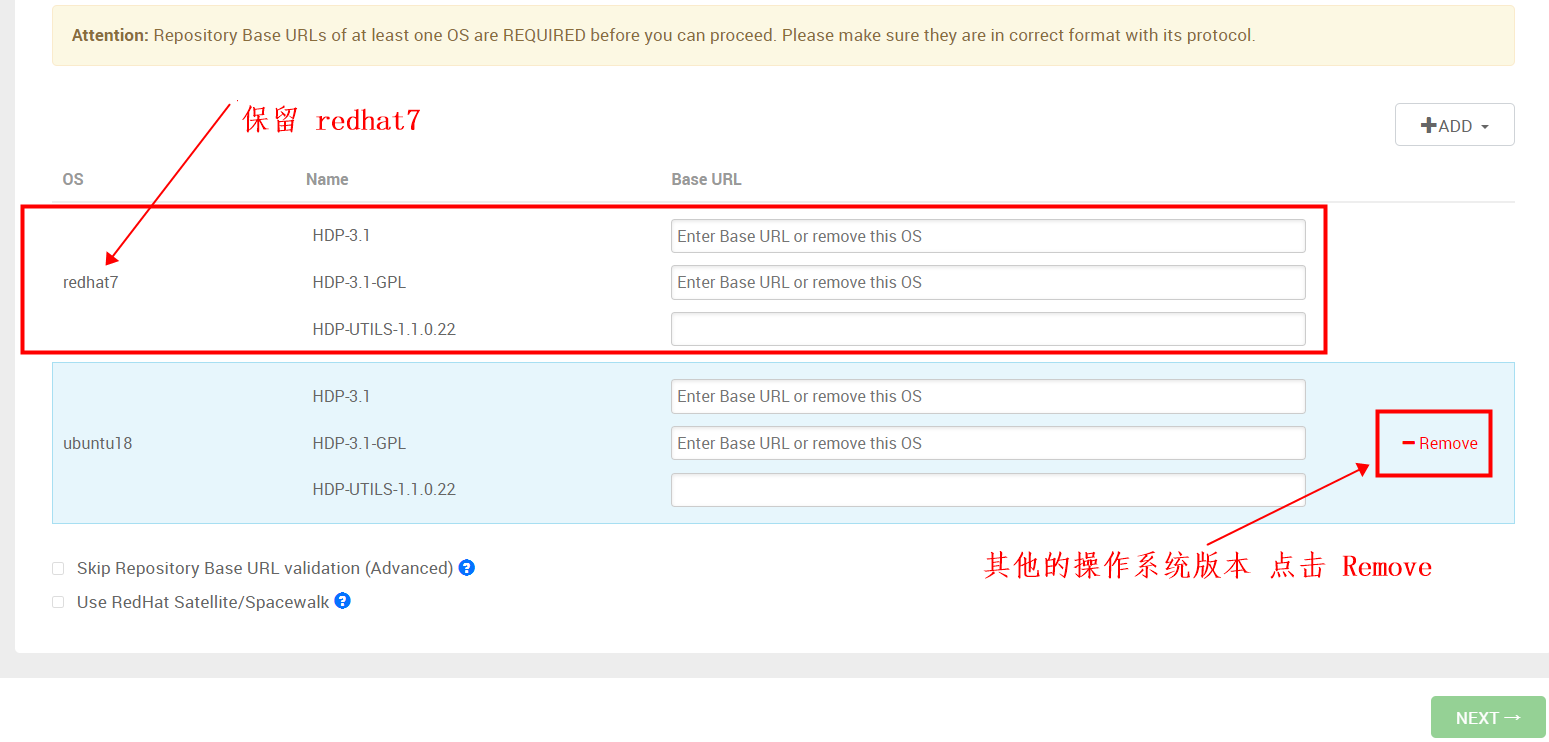

Keep the redhat7 entry and click "Remove" for the other operating systems.

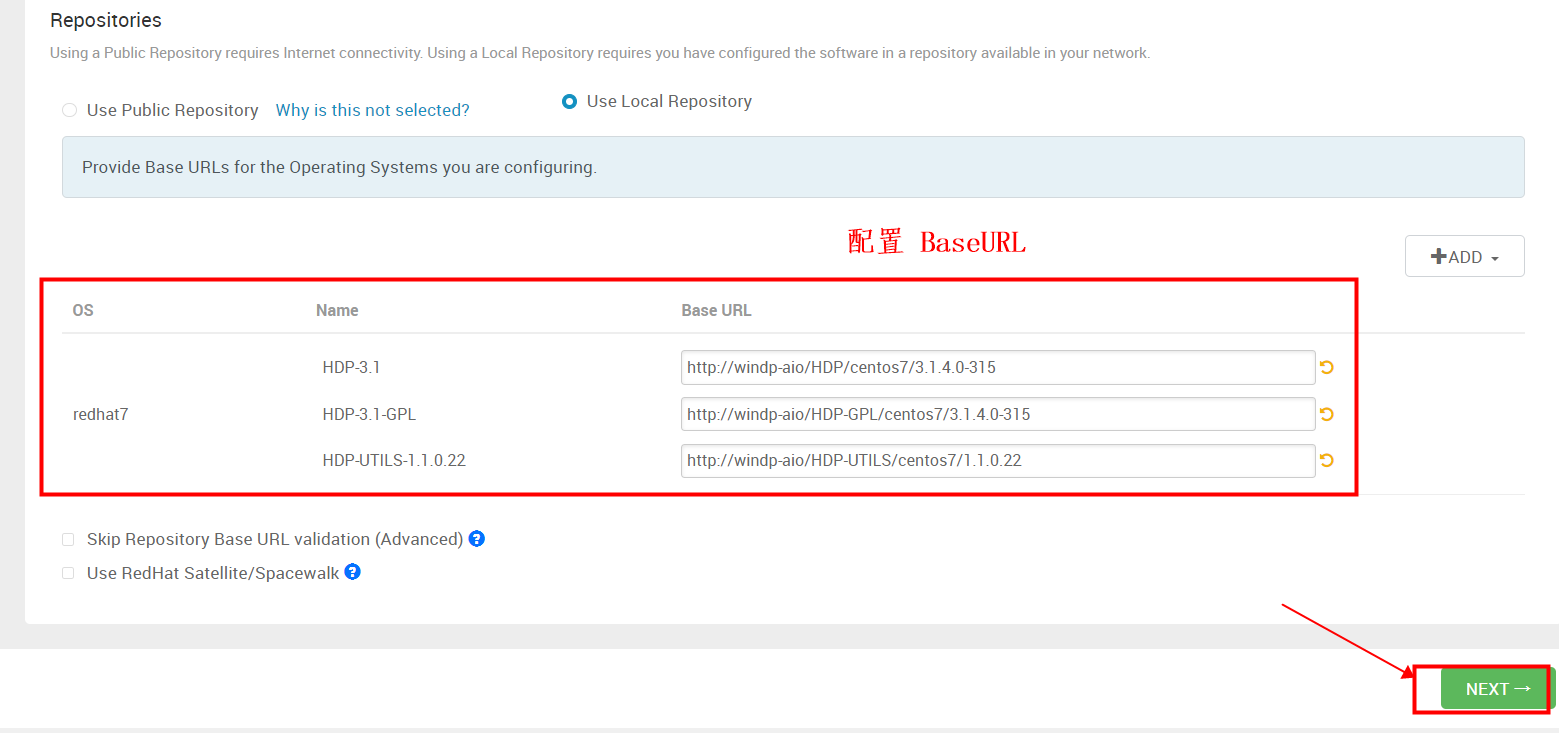

Copy the following URLs into the Base URL fields in order, then click Next:

- http://windp-aio/HDP/centos7/3.1.4.0-315
- http://windp-aio/HDP-GPL/centos7/3.1.4.0-315
- http://windp-aio/HDP-UTILS/centos7/1.1.0.22

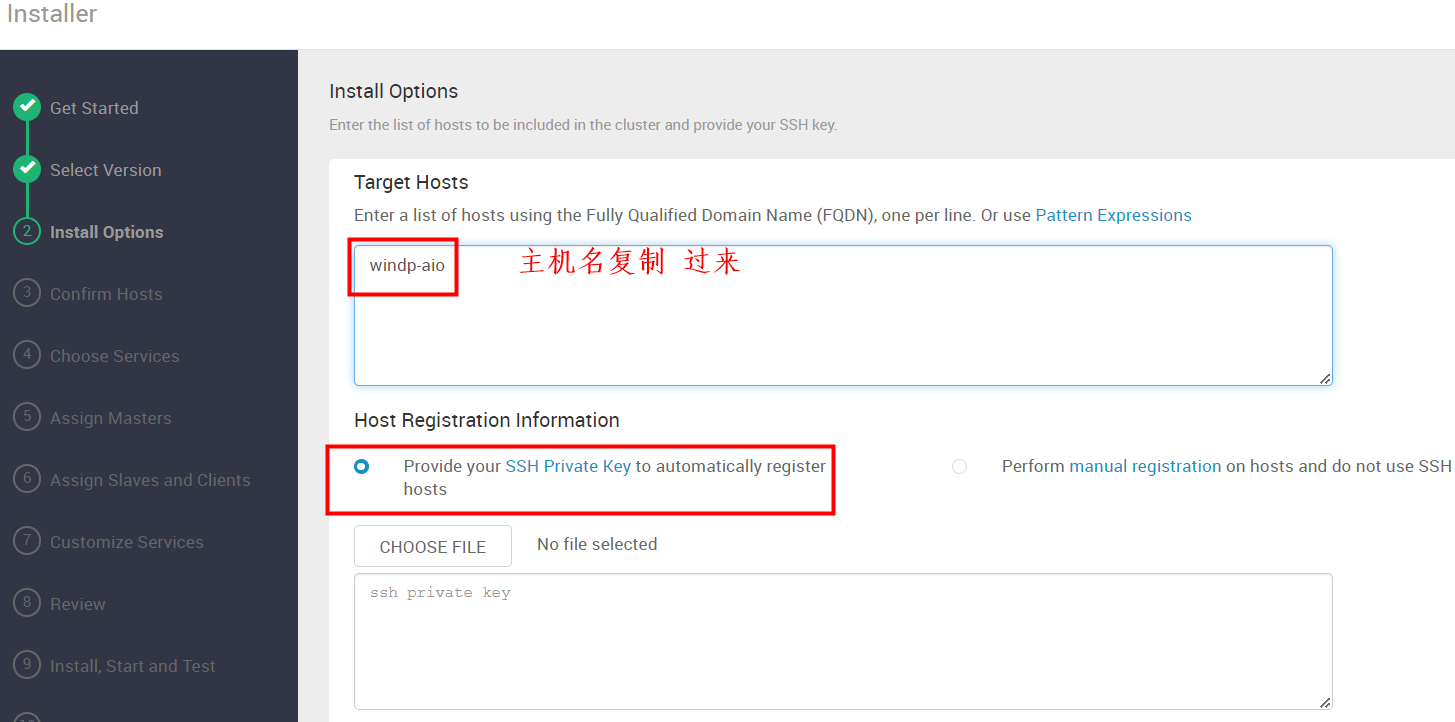

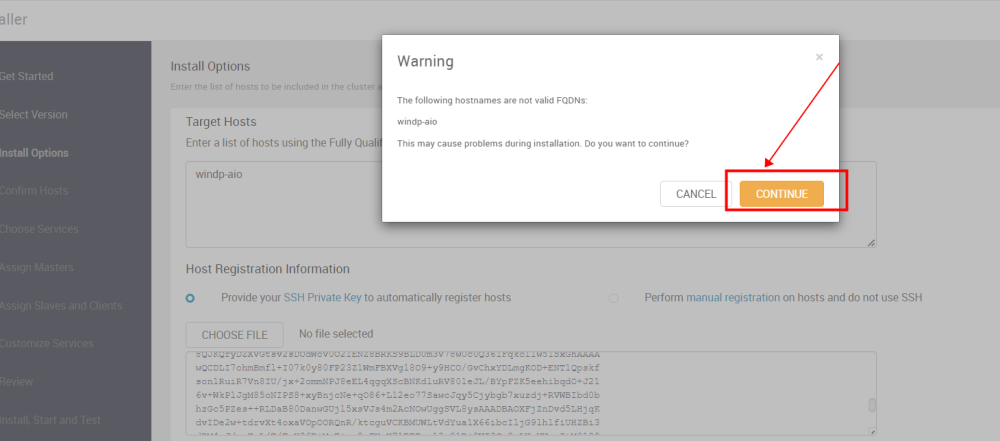
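A yum repository publishes its index at `repodata/repomd.xml` below the base URL, so fetching that file is a quick way to confirm that each Base URL is a usable repository before clicking Next. A minimal sketch, assuming `curl` is available and that `windp-aio` resolves on the machine where it runs; `repomd_url` and `check_yum_baseurl` are hypothetical helpers.

```bash
#!/bin/bash
# Build the metadata index URL for a yum base URL (a trailing slash is tolerated).
repomd_url() {
    printf '%s/repodata/repomd.xml\n' "${1%/}"
}

# Return 0 if the base URL serves a yum metadata index.
check_yum_baseurl() {
    curl -fsS -o /dev/null --max-time 10 "$(repomd_url "$1")"
}

# Example:
# for base in http://windp-aio/HDP/centos7/3.1.4.0-315 \
#             http://windp-aio/HDP-GPL/centos7/3.1.4.0-315 \
#             http://windp-aio/HDP-UTILS/centos7/1.1.0.22; do
#     check_yum_baseurl "$base" && echo "OK   $base" || echo "FAIL $base"
# done
```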

Install Options

- Target Hosts: windp-aio

- Host Registration Information: select "Provide your SSH Private Key to automatically register hosts" (red box)

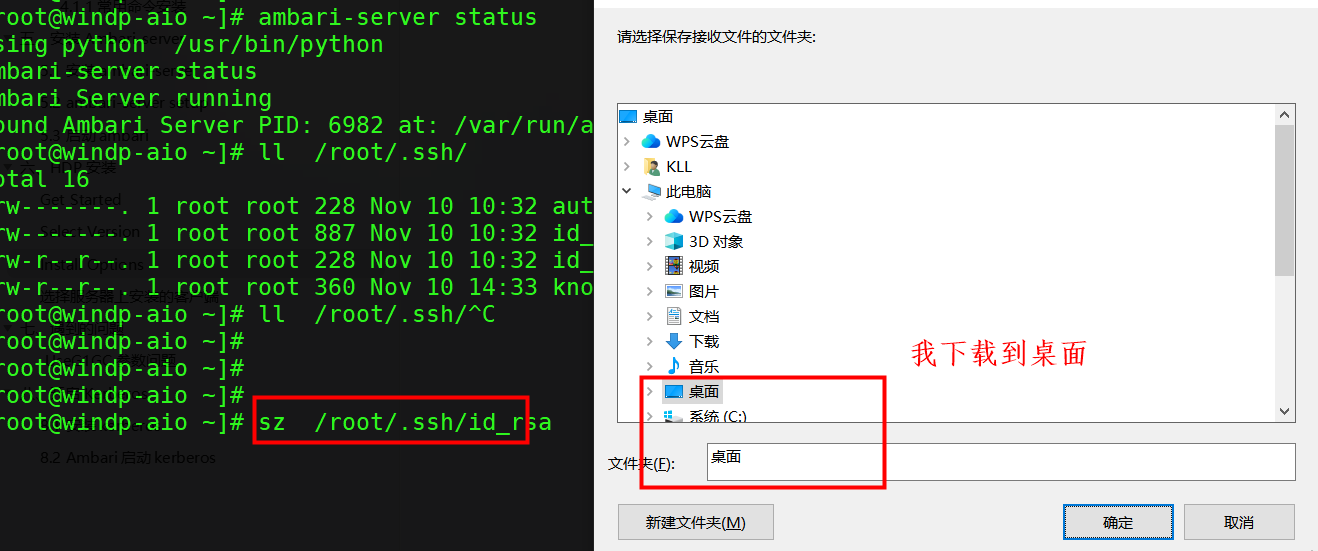

On the Linux server, run the following command to download the private key to your local Windows machine:

```bash
sz /root/.ssh/id_rsa
```

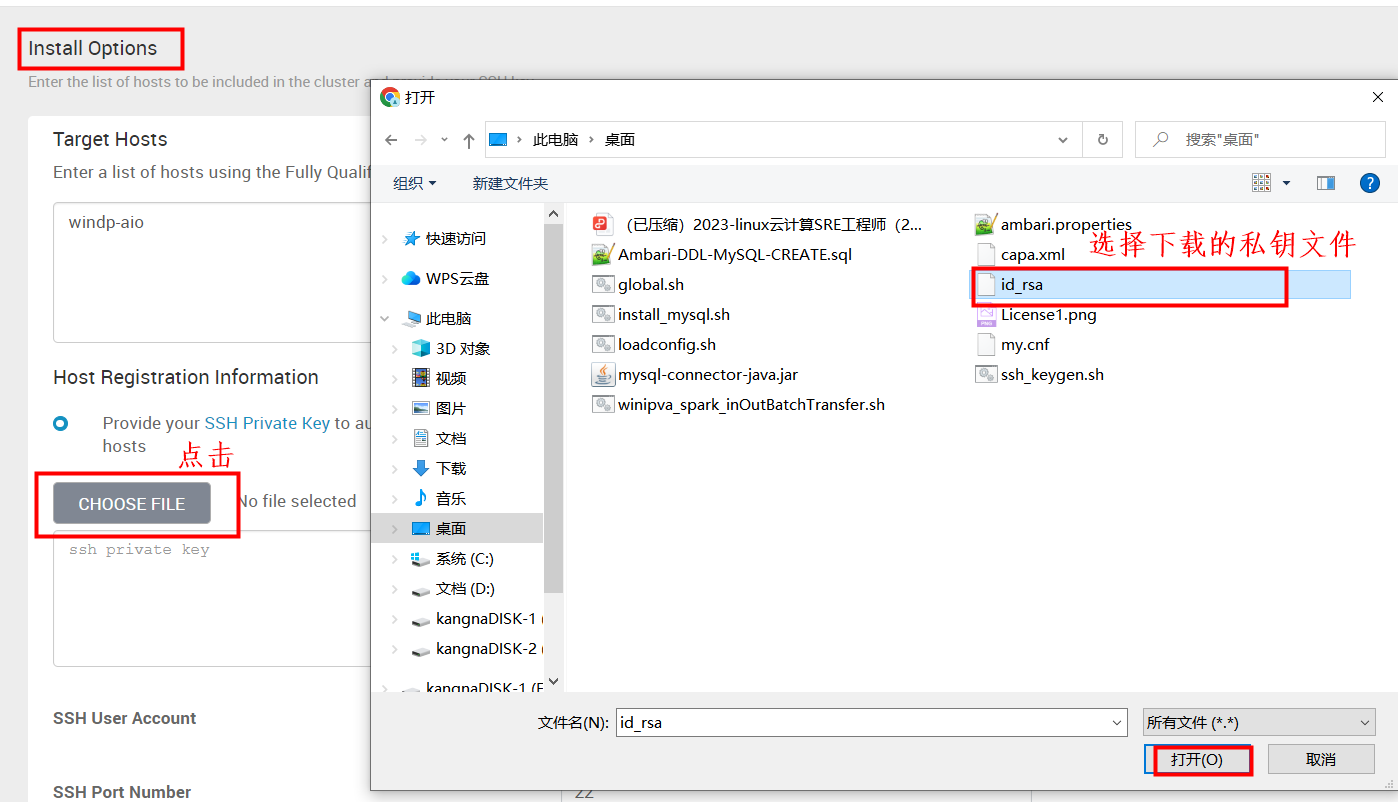

Upload the downloaded private key: click "CHOOSE FILE", select the "id_rsa" file and click Open.

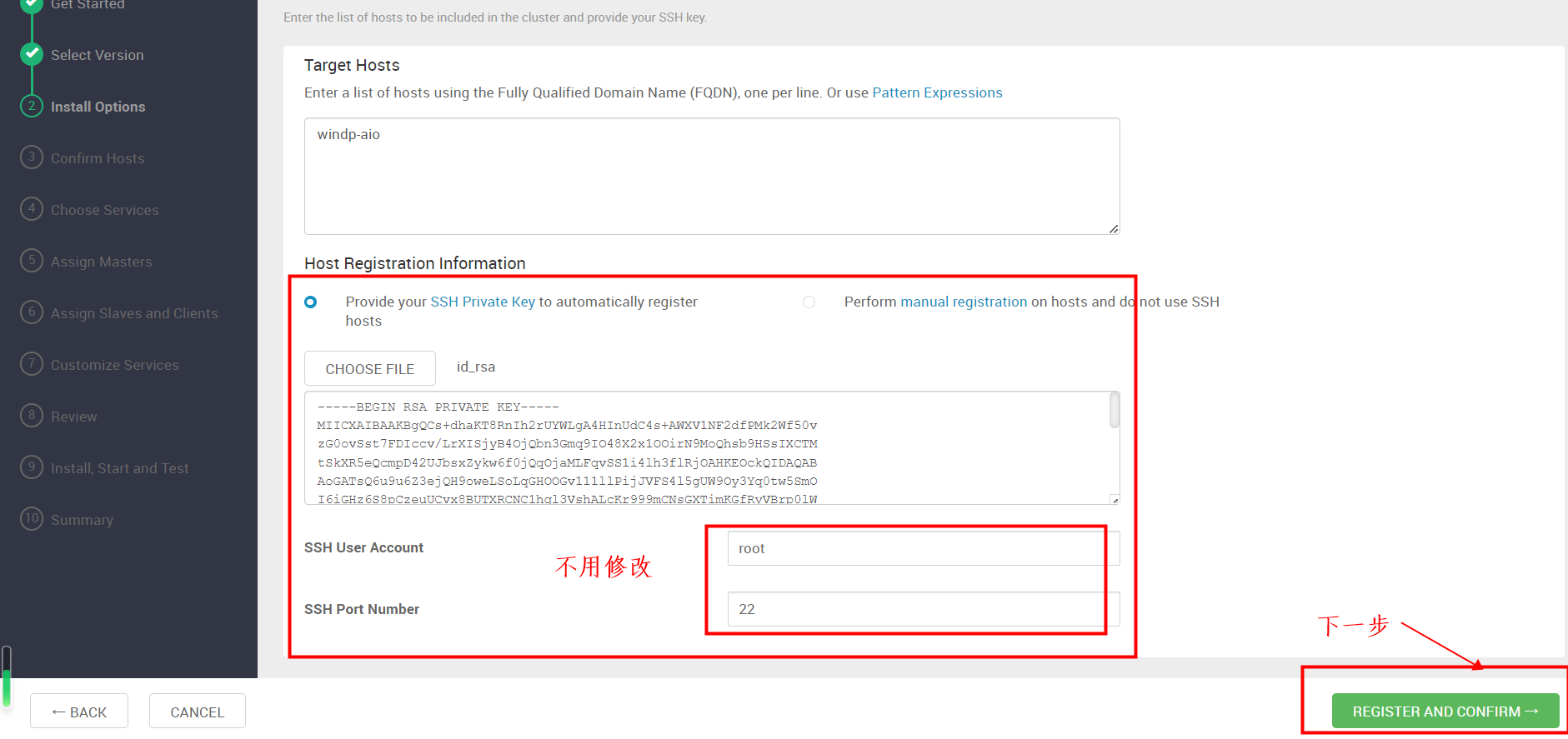

Once the private key file has been uploaded, as shown below, keep the default SSH user and port and click Next:

- SSH User Account: root

- SSH Port Number: 22

If a Warning pops up (usually because the host name does not meet the naming requirements), it can be ignored.

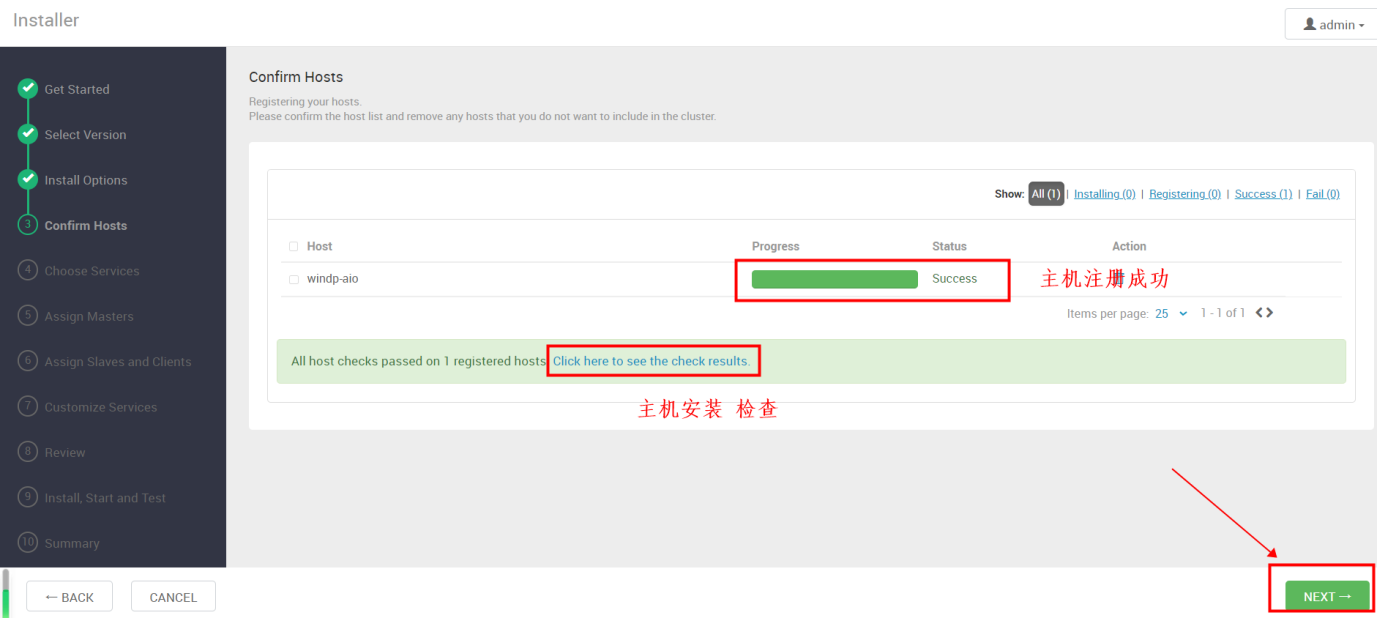

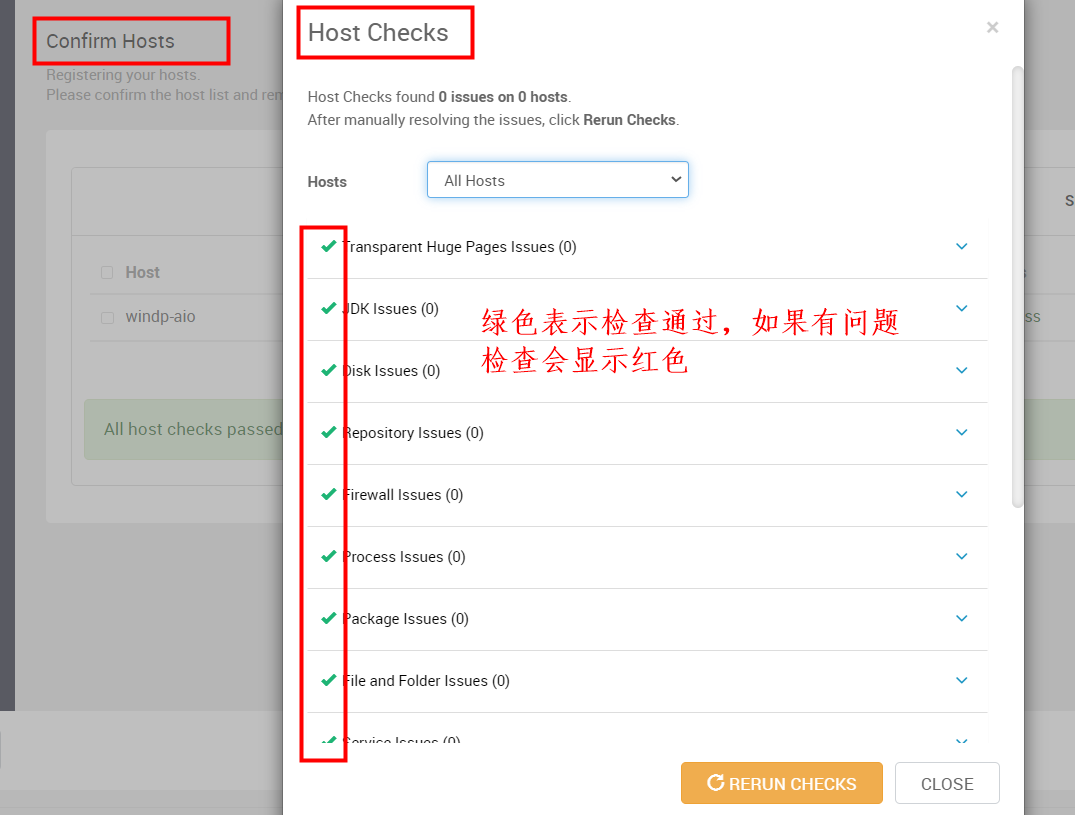
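install_base.sh sets the host name to `windp-aio`. Host names may contain only letters, digits and hyphens (RFC 952/1123), so a name with an underscore such as `windp_aio` is not a valid host name. A minimal sketch of a check against those rules; `is_valid_hostname` is a hypothetical helper, and it does not check whether the name is fully qualified or resolvable.

```bash
#!/bin/bash
# Return 0 if $1 is a syntactically valid host name under RFC 952/1123:
# dot-separated labels of 1-63 letters, digits or hyphens, with no hyphen at
# either end of a label, and at most 253 characters overall.
is_valid_hostname() {
    local name=$1
    [ -n "$name" ] && [ "${#name}" -le 253 ] || return 1
    printf '%s\n' "$name" |
        grep -Eq '^[A-Za-z0-9]([A-Za-z0-9-]{0,61}[A-Za-z0-9])?(\.[A-Za-z0-9]([A-Za-z0-9-]{0,61}[A-Za-z0-9])?)*$'
}
```

Usage: `is_valid_hostname "$(hostname)" || echo "host name $(hostname) is not valid"`.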

Confirm Hosts

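Wait for the host to register. The checks below must pass; if any problems are reported, resolve them one by one.

Click "Click here to see the check results." to see whether the host checks passed. If there are problems, the base environment is misconfigured; if there are none, click "CLOSE" and then Next.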

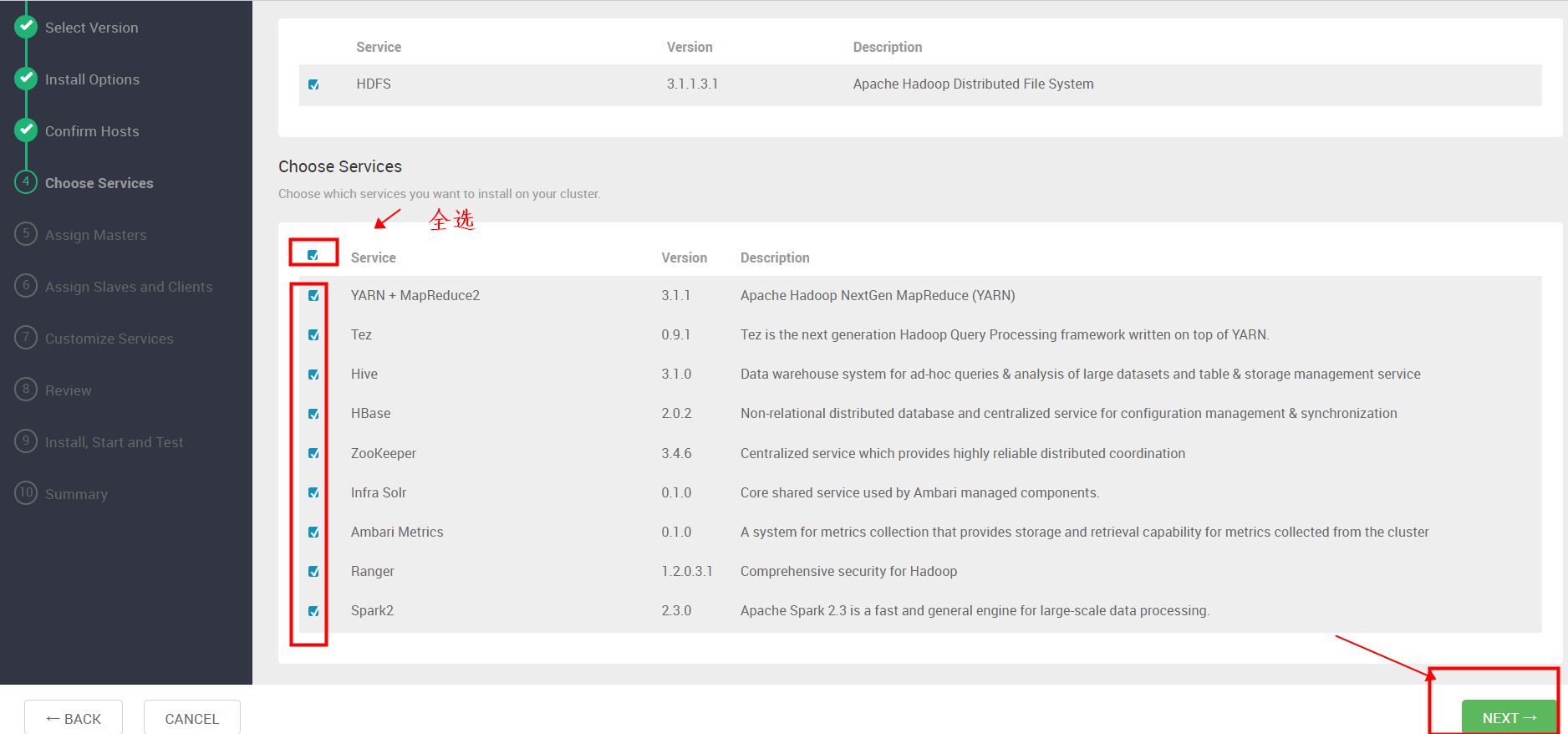

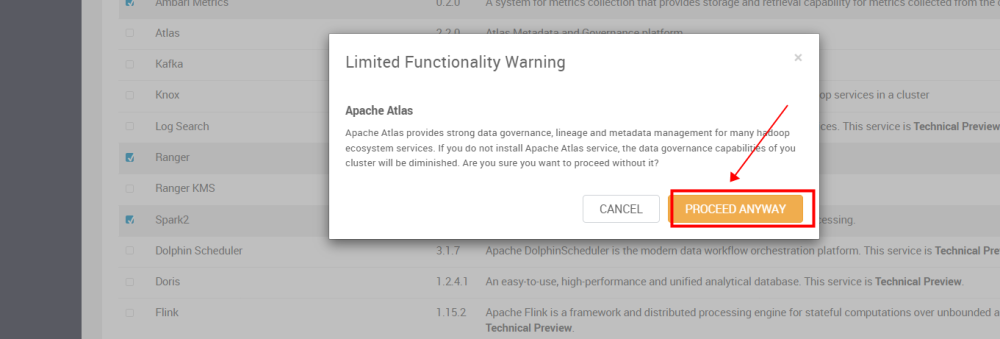

Choose Services

Select the components to install: HDFS, YARN + MapReduce2, Tez, Hive, HBase, ZooKeeper, Infra Solr, Ambari Metrics, Ranger and Spark2, then click Next.

If a Warning appears, ignore it and continue the installation.

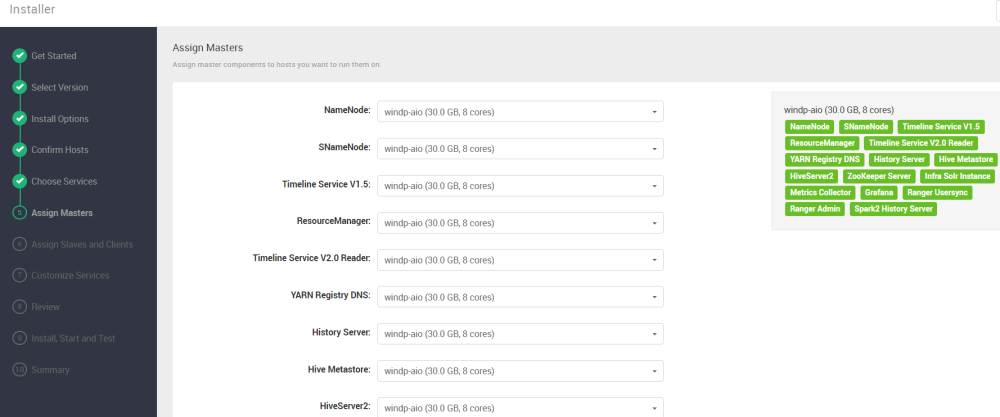

Assign Masters

This is a single-node environment, so just click Next.

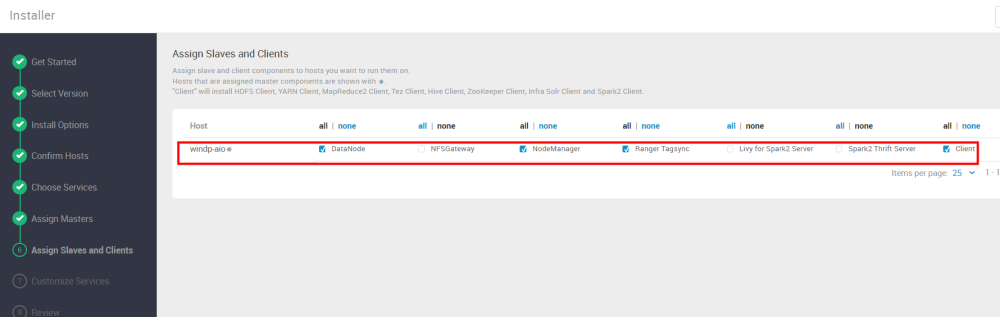

Assign Slaves and Clients

Choose the clients to install on the server (all are selected by default) and click Next.

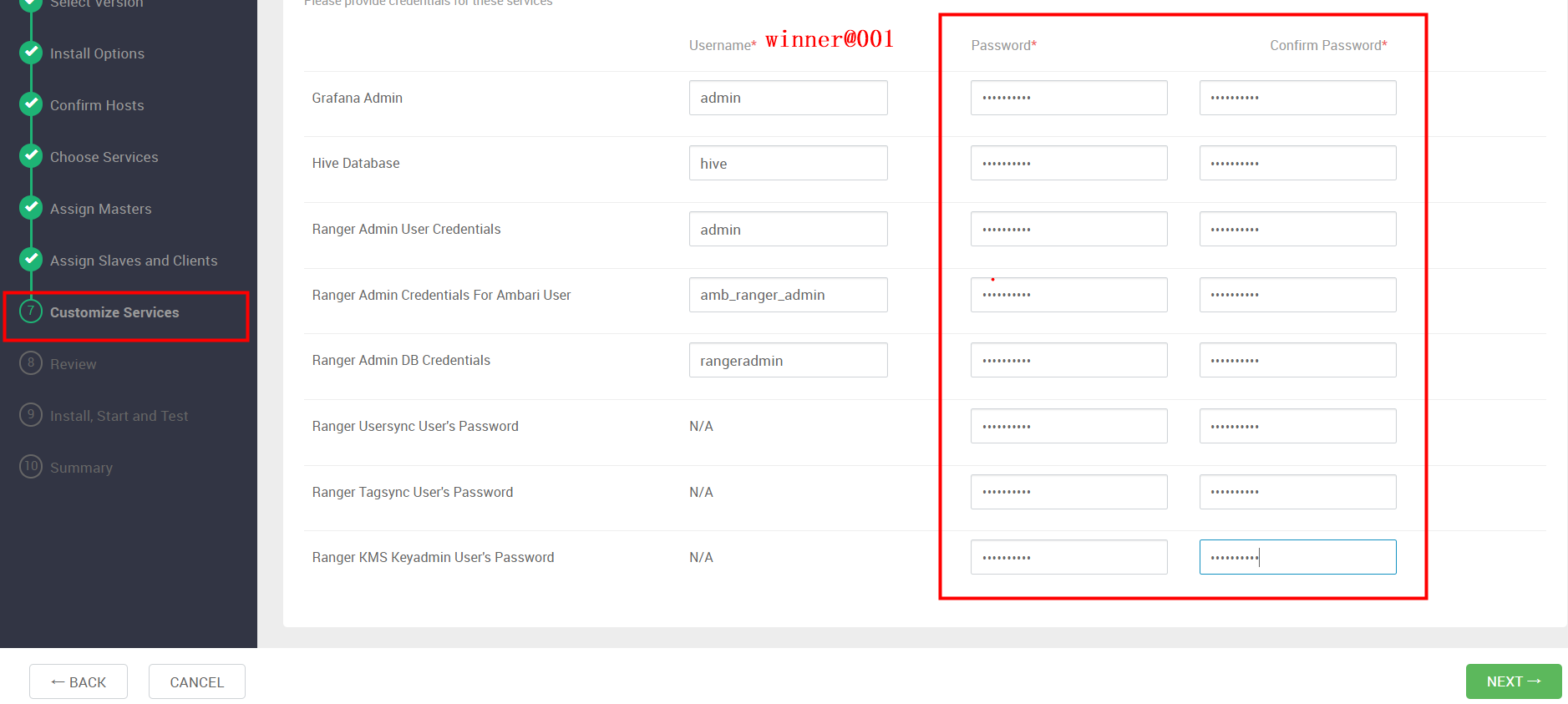

Customize Services

CREDENTIALS

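Set the passwords. Pick one that is easy to remember; here we use "Winner001" throughout. Paste it into every "password" field, overwriting any field that already has a value, then click Next.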

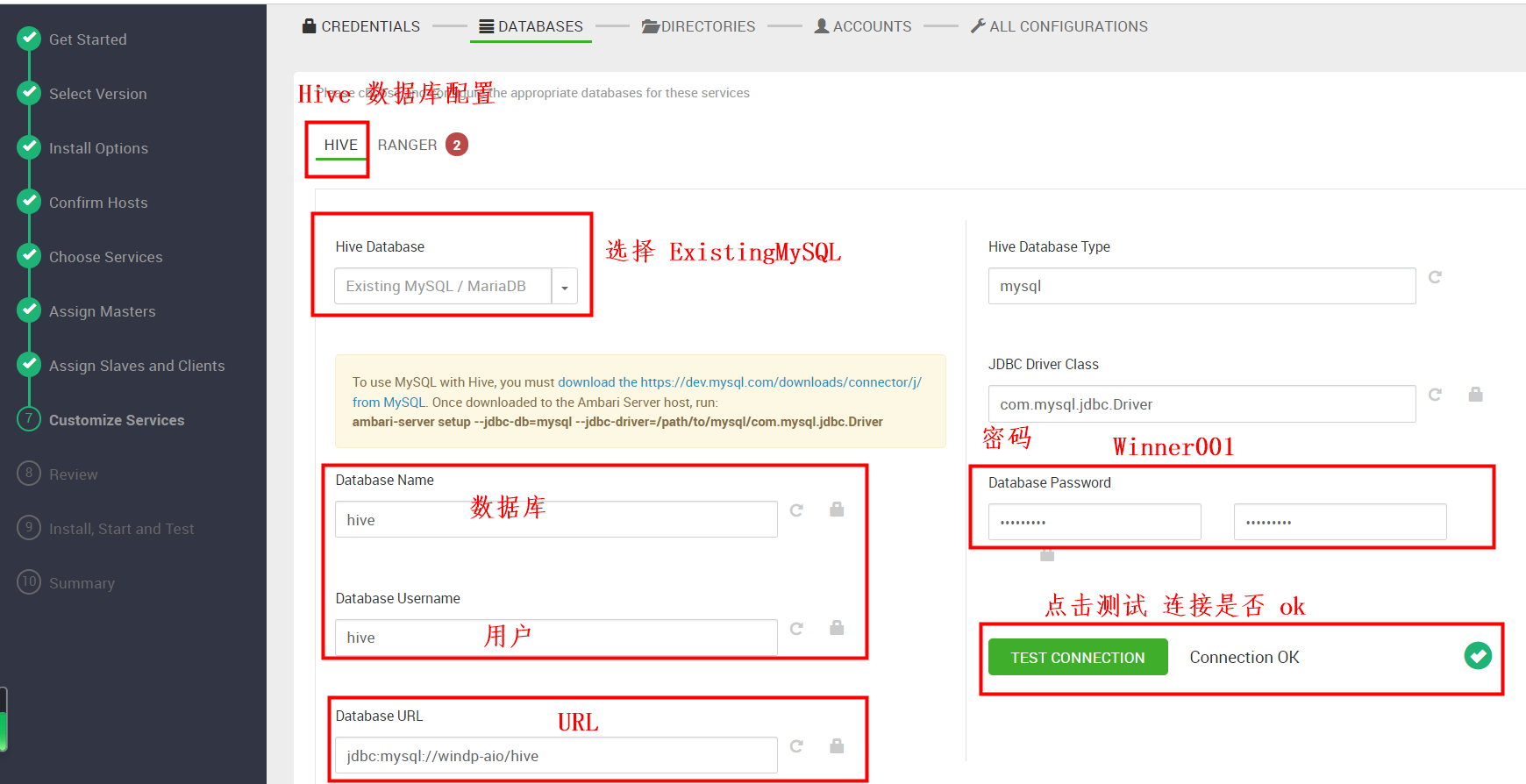

DATABASES

Click the DATABASES tab and configure the Hive database, making sure the connection test passes. For Hive Database, select Existing MySQL / MariaDB:

- Database Name: hive

- User: hive

- Database Password: Winner001

- Database URL: jdbc:mysql://windp-aio/hive

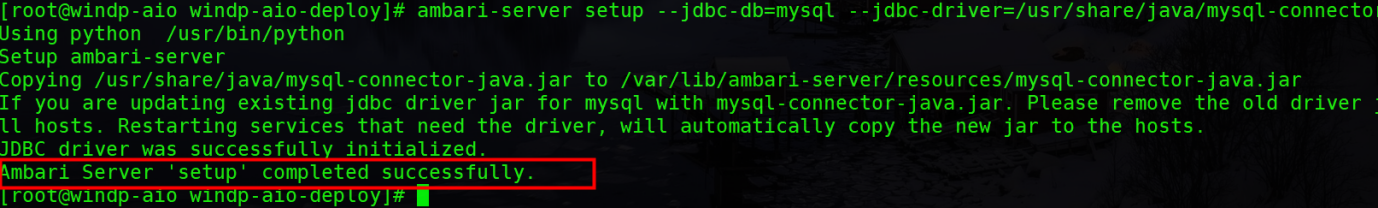

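Note: if the connection test fails, follow the prompt and register the JDBC driver location manually on the command line, then test the connection again:

```bash
ambari-server setup --jdbc-db=mysql --jdbc-driver=/usr/share/java/mysql-connector-java.jar
```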

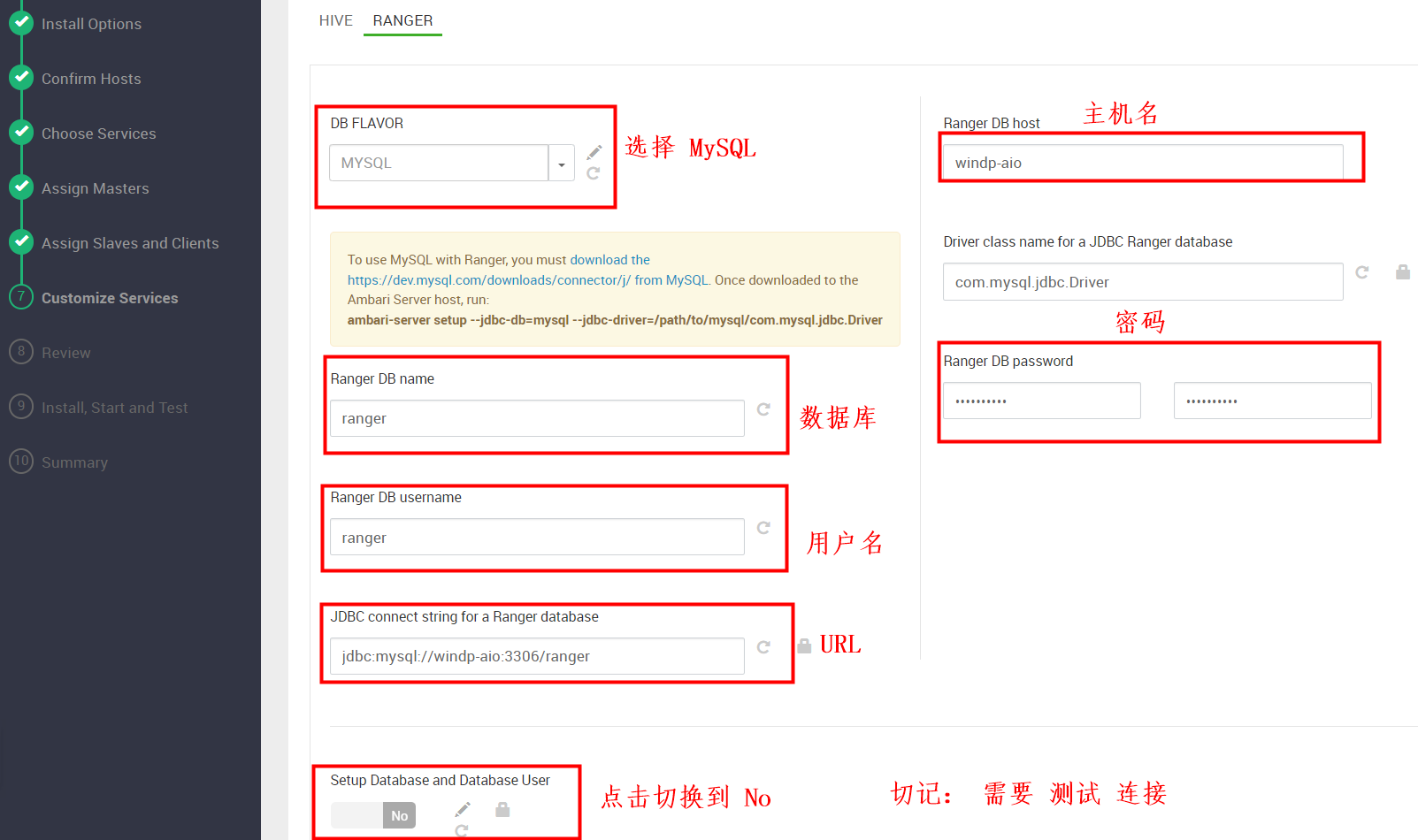

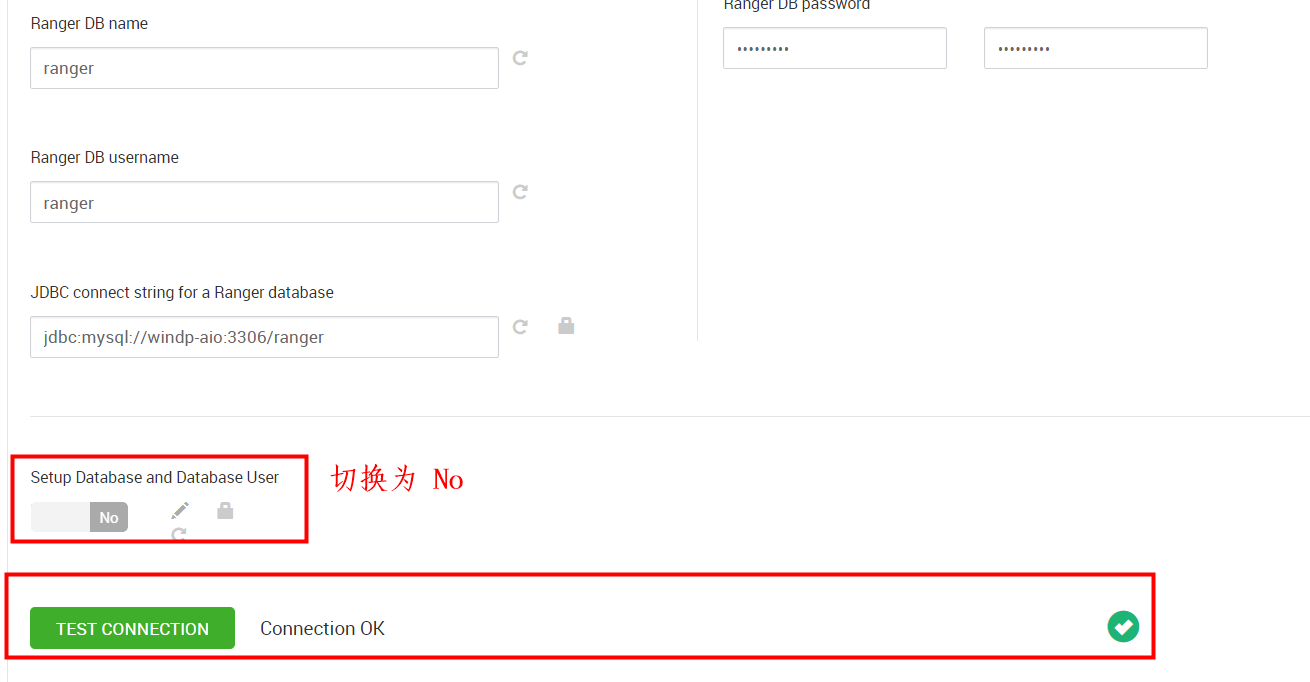
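When the connection test in the UI fails, trying the same credentials with the `mysql` client helps separate driver problems from database or permission problems. A minimal sketch: `parse_mysql_jdbc_url` and `test_mysql_login` are hypothetical helpers, only the simple `jdbc:mysql://host[:port]/db[?options]` form is handled, and port 3306 (MySQL's default) is assumed when none is given.

```bash
#!/bin/bash
# Split jdbc:mysql://host[:port]/db[?options] into "host port db".
parse_mysql_jdbc_url() {
    local url=$1 rest hostport host port db
    rest=${url#jdbc:mysql://}
    [ "$rest" != "$url" ] || return 1              # not a jdbc:mysql:// URL
    case "$rest" in */?*) ;; *) return 1 ;; esac    # a database name is required
    hostport=${rest%%/*}
    db=${rest#*/}
    db=${db%%\?*}                                   # drop ?key=value options
    host=${hostport%%:*}
    if [ "$host" = "$hostport" ]; then port=3306; else port=${hostport#*:}; fi
    printf '%s %s %s\n' "$host" "$port" "$db"
}

# Log in with the values entered in the Ambari UI: test_mysql_login URL USER PASSWORD
test_mysql_login() {
    local parsed host port db
    parsed=$(parse_mysql_jdbc_url "$1") || return 1
    host=${parsed%% *}
    db=${parsed##* }
    port=${parsed#* }
    port=${port%% *}
    mysql -h "$host" -P "$port" -u "$2" -p"$3" -e 'SELECT 1' "$db" >/dev/null
}
```

Usage: `test_mysql_login jdbc:mysql://windp-aio/hive hive Winner001 && echo "hive login OK"`.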

Click "RANGER" to configure the Ranger database, making sure the connection test passes:

- Database Name: ranger

- User: ranger

- Database Password: Winner001

- Database URL: jdbc:mysql://windp-aio/ranger

- Ranger DB host: windp-aio

When done, click Next.

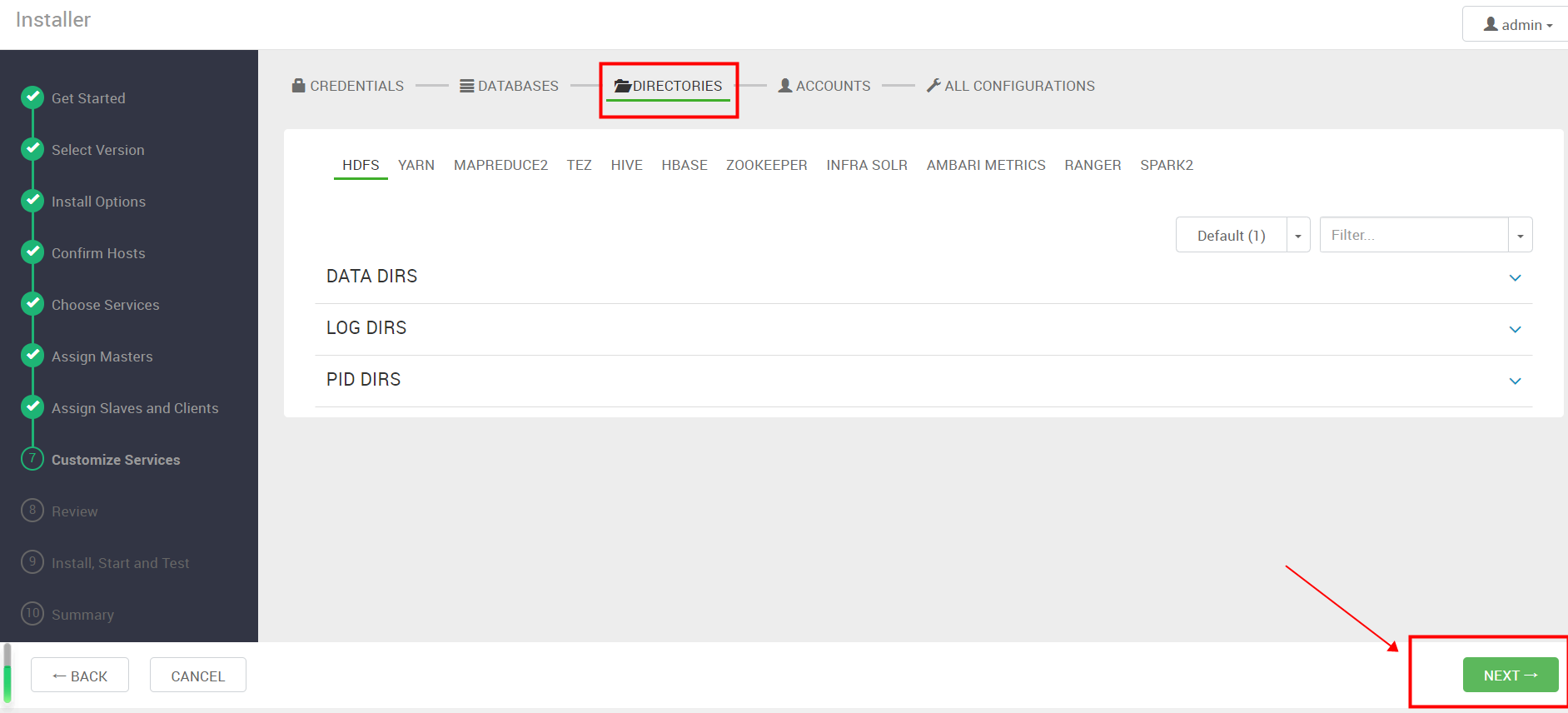

DIRECTORIES

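Keep the defaults and click Next. After installation, plan and adjust the storage paths; data and log directories should generally be placed on data disks.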

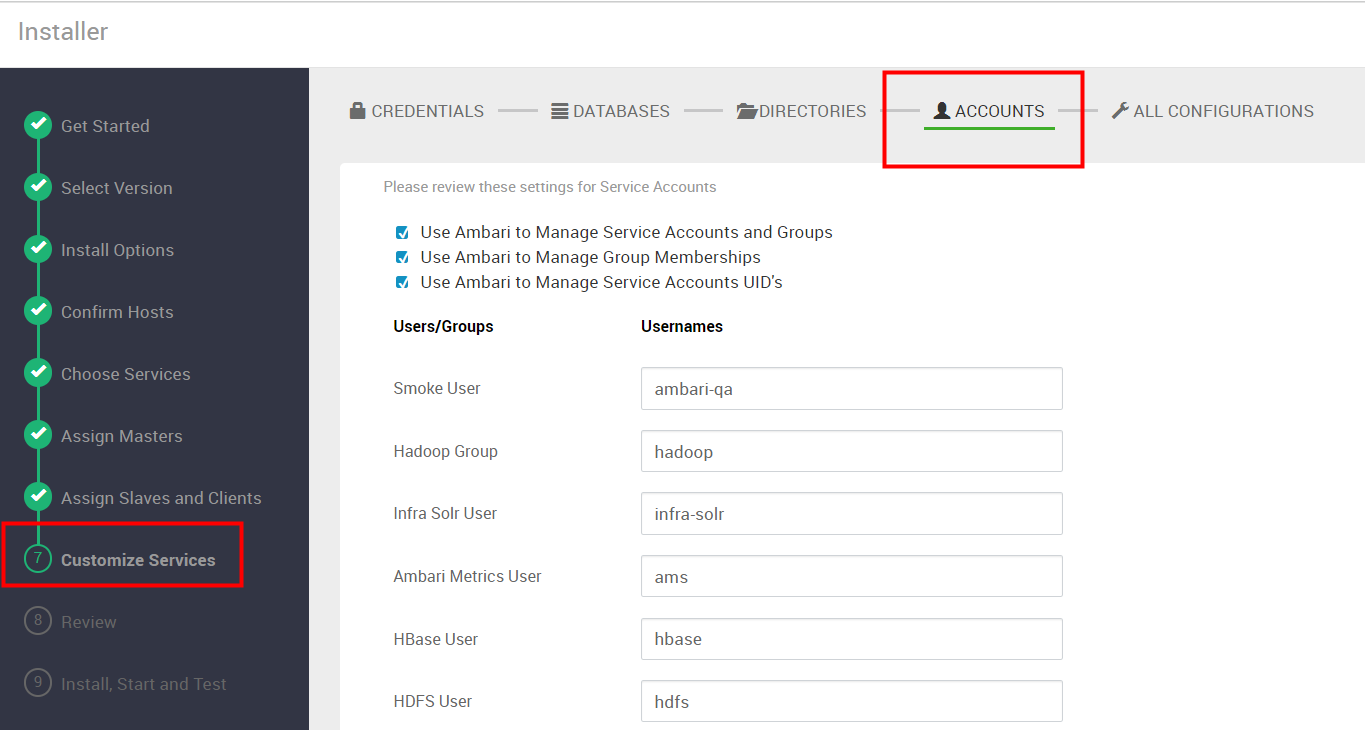
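When planning the data and log directories later, it helps to know which file system each directory actually lives on. A minimal sketch using `df -P`, whose sixth column is the mount point; `mount_point_of` and `warn_if_on_root` are hypothetical helpers, and the paths in the usage line are only examples.

```bash
#!/bin/bash
# Print the mount point of the file system that holds a path.
mount_point_of() {
    # df -P prints a header, then one line per file system; field 6 is "Mounted on".
    df -P "$1" | awk 'NR == 2 { print $6 }'
}

# Warn for every existing directory that sits on the root file system.
warn_if_on_root() {
    local dir
    for dir in "$@"; do
        if [ ! -d "$dir" ]; then
            echo "skip: $dir does not exist" >&2
            continue
        fi
        if [ "$(mount_point_of "$dir")" = "/" ]; then
            echo "WARN: $dir is on the root file system, consider a data disk" >&2
        fi
    done
}
```

Usage: `warn_if_on_root /hadoop /var/log`.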

ACCOUNTS

Confirm the account information; keep the defaults and click Next.

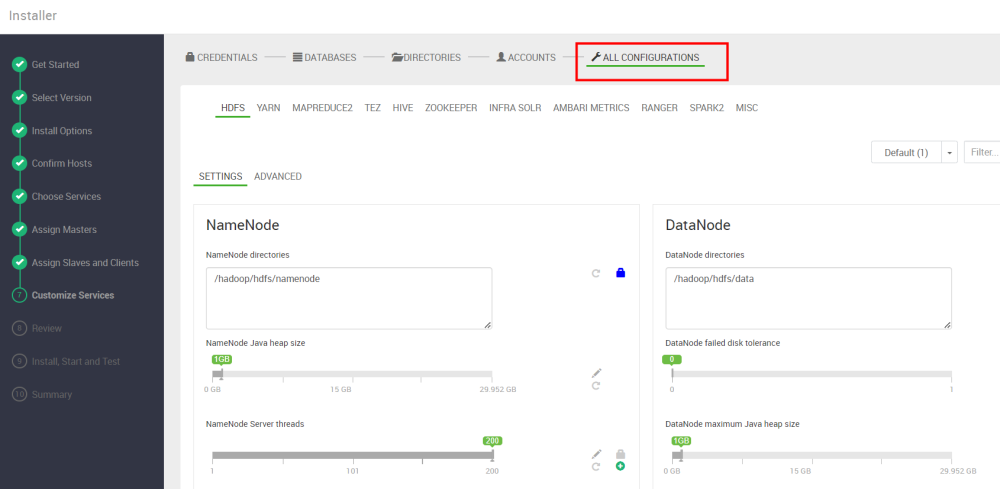

ALL CONFIGURATIONS

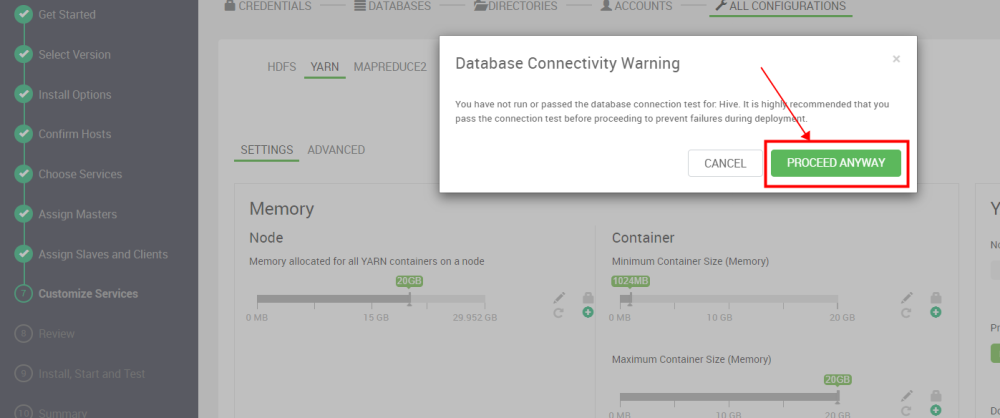

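Tune the component parameters to the available resources. For example, NameNode and HBase Master both default to 1 GB of memory; adjust these to the resources of your production environment. Here we keep the defaults and click Next.

Confirm and click Next.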

Review

Click DEPLOY.

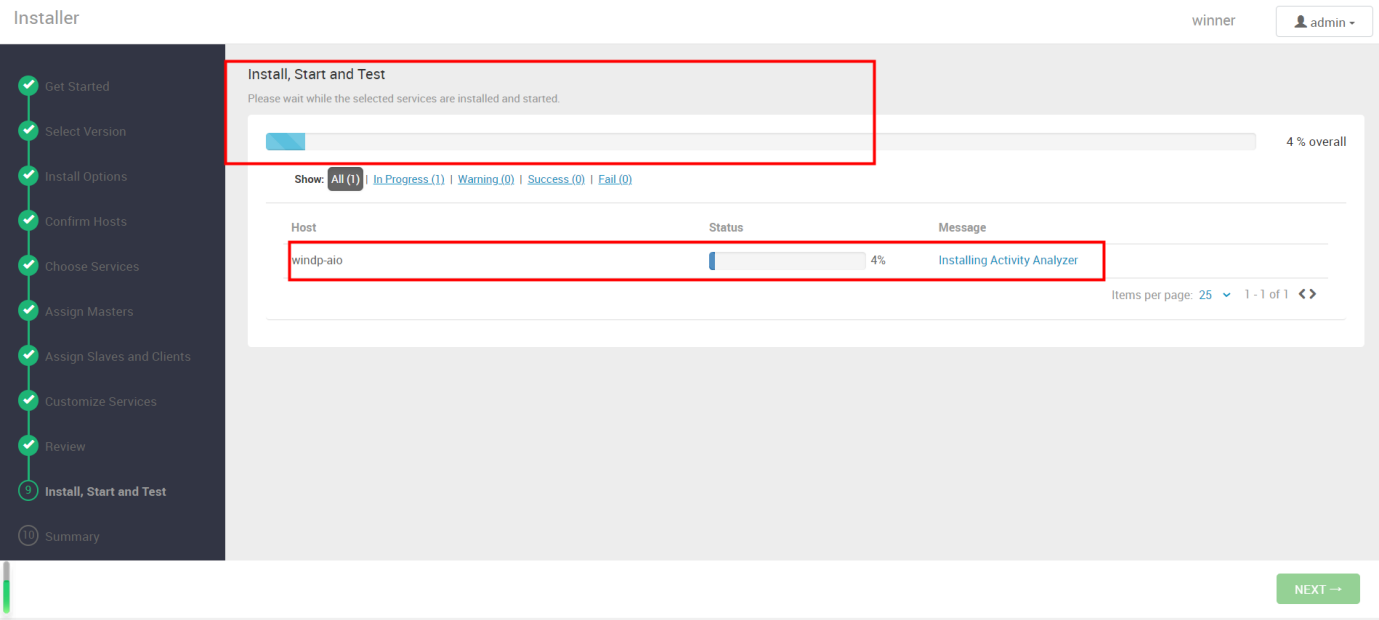

The components are being installed; this takes about 40 minutes.

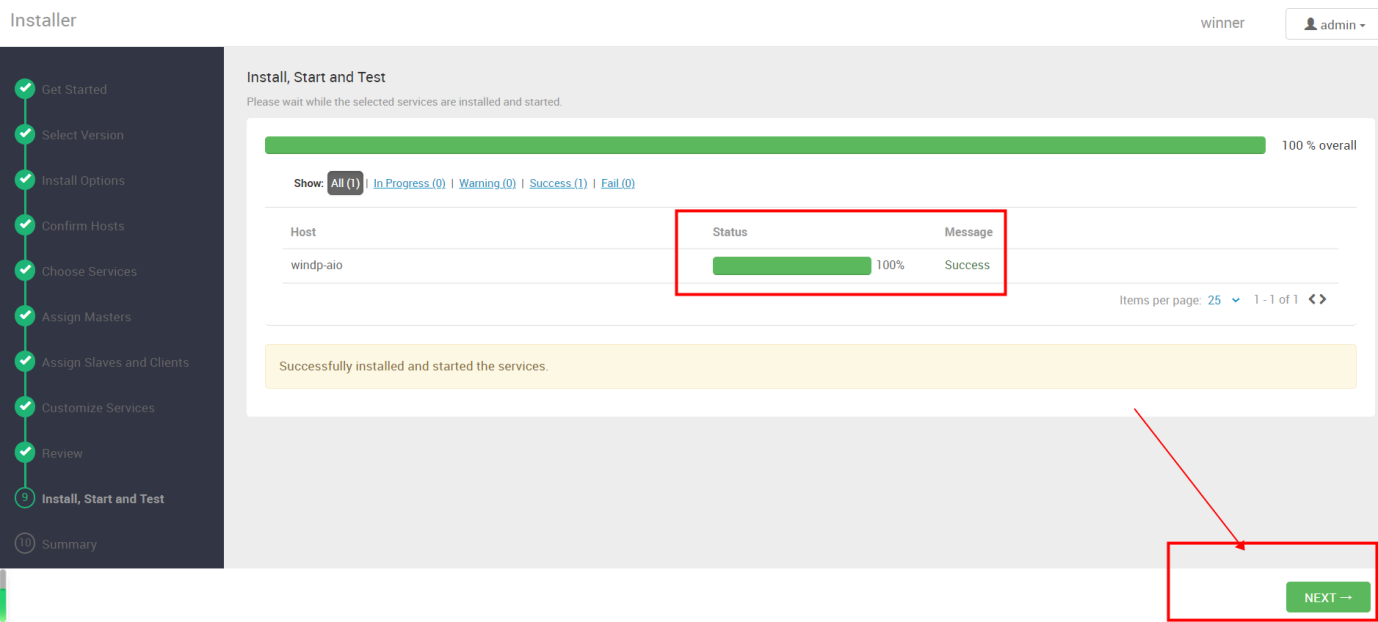

When installation completes, click Next. If any component fails to install, check its logs to find the cause.

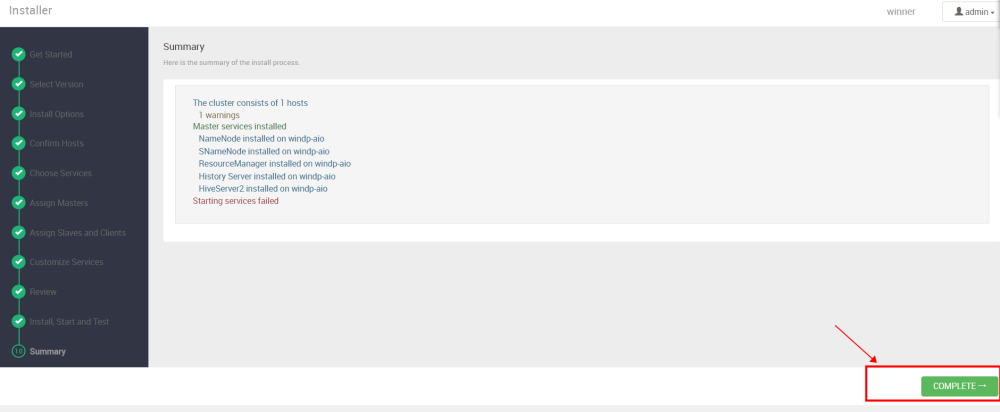

Click COMPLETE.

Installation is complete. Fix any components that failed to start one at a time.

六、开启Kerberos

6.1 kerberos服务检查

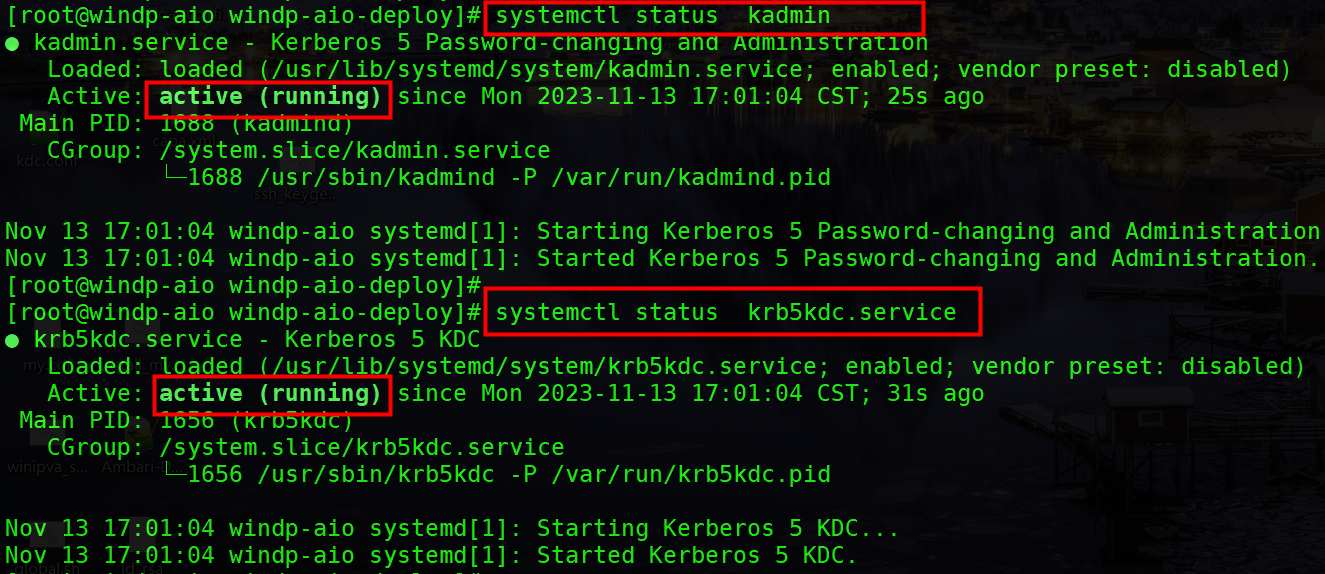

执行如下命令,查看krb5kdc,kadmin服务是否启动成功,显示如图“running”则启动成功。

cd /opt/windp-aio-deploy/ systemctl status krb5kdc.service systemctl status kadmin.service

krb5kdc,kadmin检查这两个服务为 running 状态,如果没有启动成功尝试重启。

如果 没有启动,尝试重启服务命令如下:

cd /opt/windp-aio-deploy/ systemctl restart krb5kdc.service systemctl restart kadmin.service

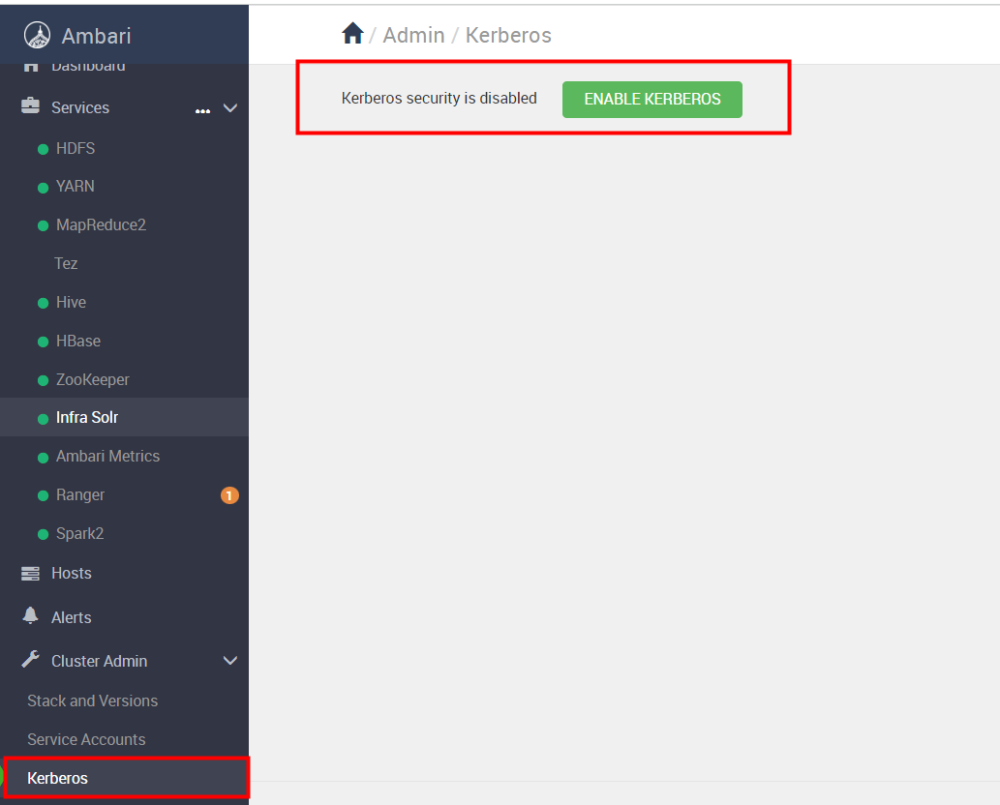
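The status check and the restart can be combined into one step. A minimal sketch; `ensure_services_running` is a hypothetical helper that relies on `systemctl is-active --quiet`, which exits 0 only when the unit is active.

```bash
#!/bin/bash
# Make sure each systemd unit is active, restarting the ones that are not.
ensure_services_running() {
    local svc rc=0
    for svc in "$@"; do
        if systemctl is-active --quiet "$svc"; then
            echo "$svc: running"
        else
            echo "$svc: not running, restarting" >&2
            systemctl restart "$svc" || rc=1
            # Check again after the restart attempt.
            systemctl is-active --quiet "$svc" || rc=1
        fi
    done
    return "$rc"
}
```

Usage: `ensure_services_running krb5kdc kadmin`.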

6.2 Enabling Kerberos in Ambari

Open the Ambari management UI, go to Kerberos and click "ENABLE KERBEROS".

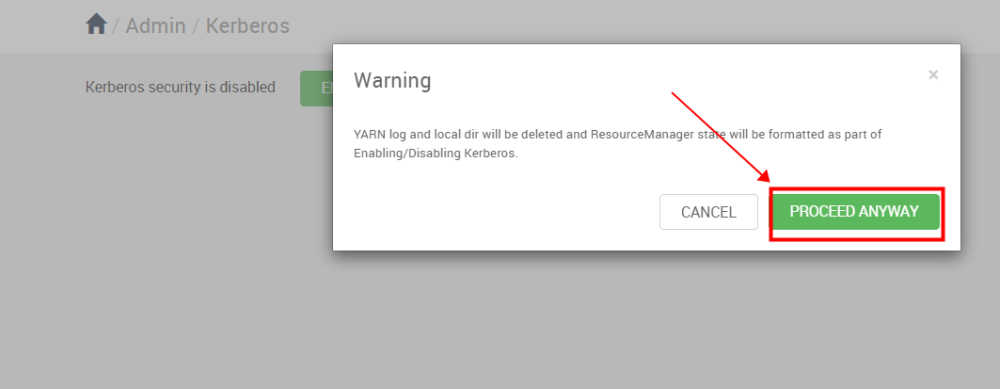

Ignore the Warning and click "PROCEED ANYWAY".

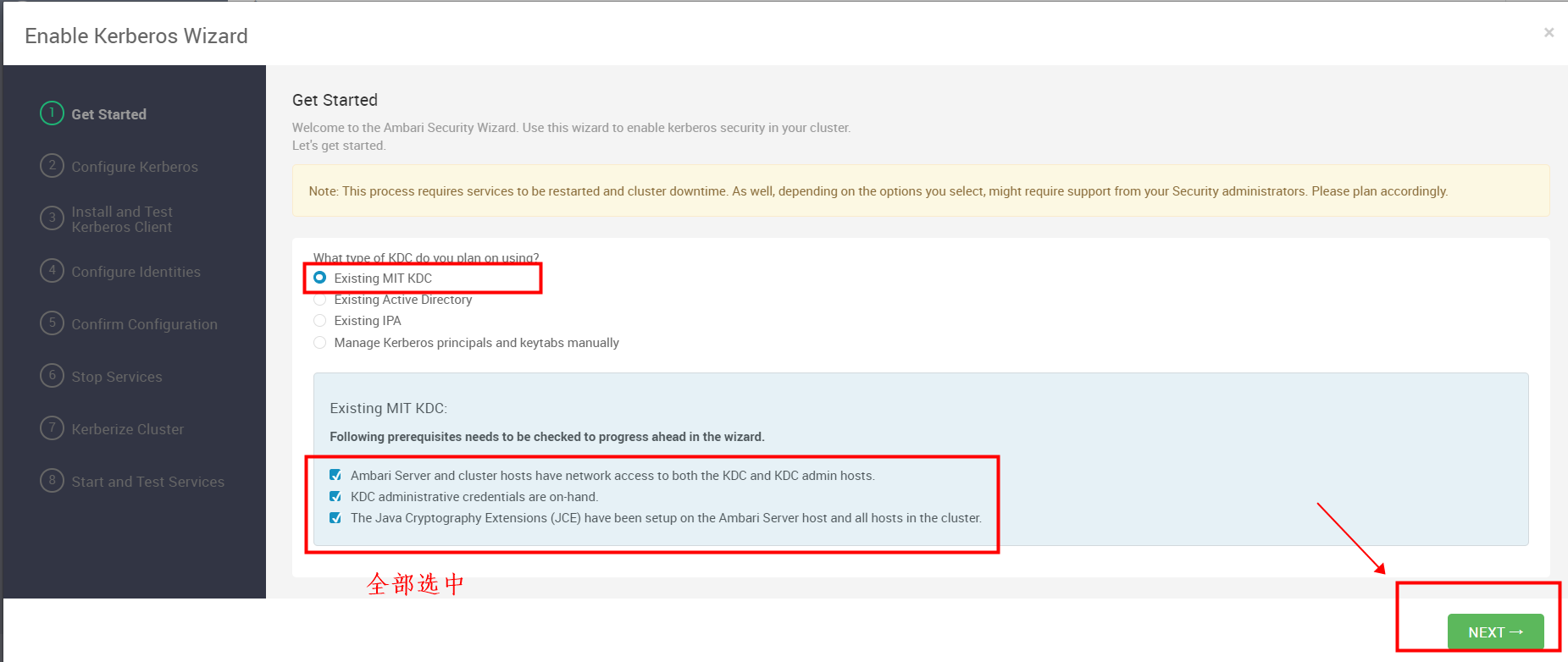

Get Started

Select MIT KDC as shown below, tick all three checkboxes underneath, then click Next.

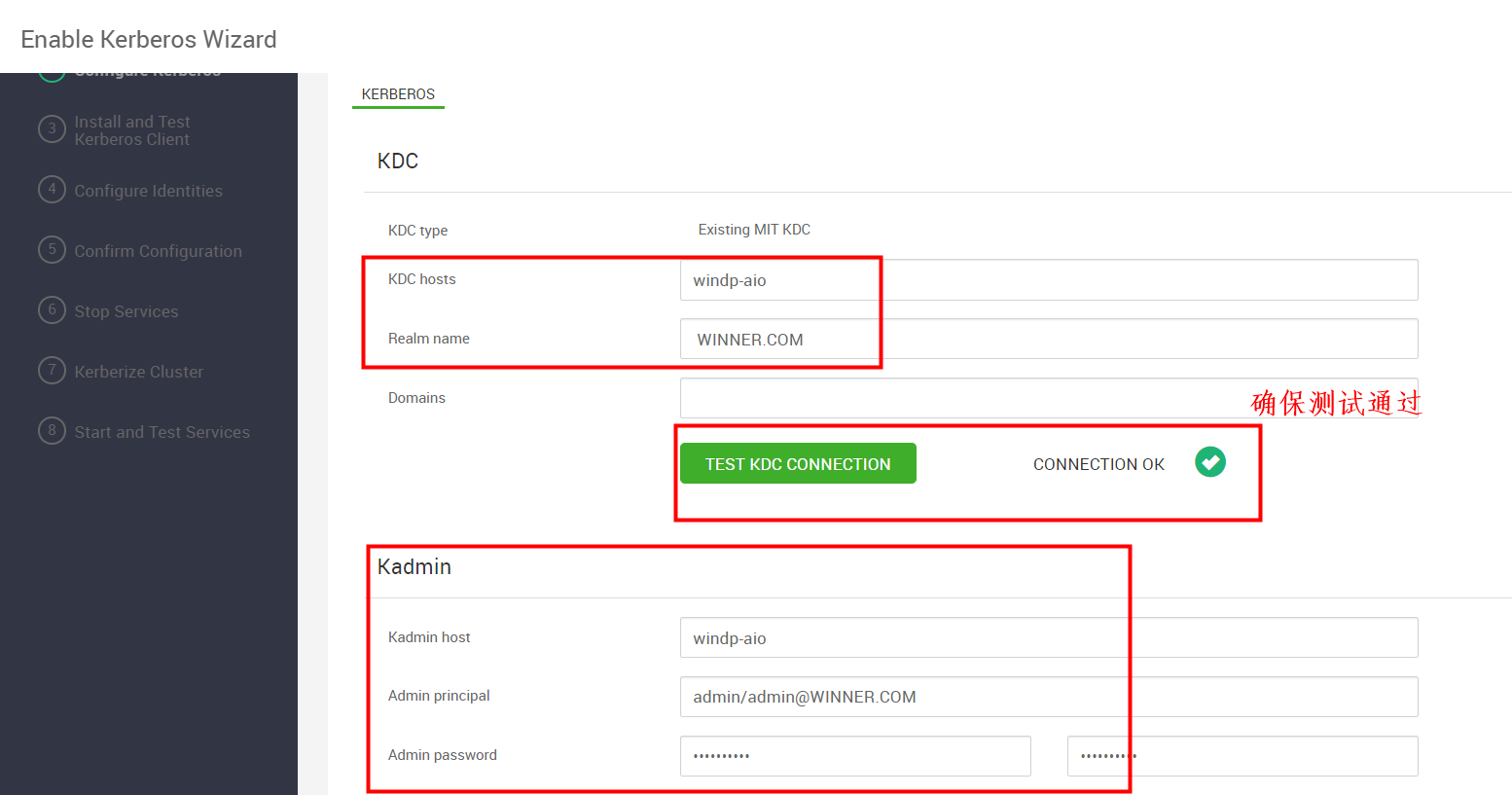

Configure Kerberos

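Enter the following values in the corresponding fields, make sure the test passes, then click Next:

- Kadmin host: windp-aio

- Realm name: WINNER.COM

- Admin principal: admin/admin@WINNER.COM

- Admin password: winner@001 (this must match `root_passwd` in global.sh, because install_base.sh uses that value as the password of `admin/admin`)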

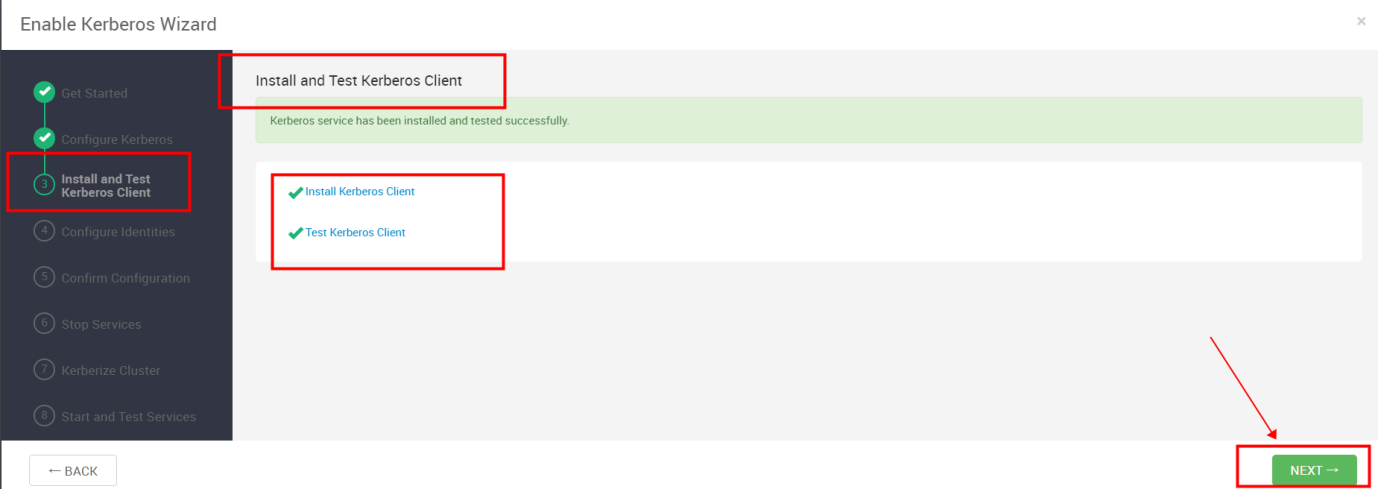
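install_base.sh creates the `admin/admin` and `winner_spark` principals with kadmin.local, so before running the test it can be worth confirming that they exist on the KDC. A minimal sketch; `missing_principals` is a hypothetical helper that reads the output of `kadmin.local -q listprincs` on stdin and prints every requested principal it does not find.

```bash
#!/bin/bash
# stdin: output of `kadmin.local -q listprincs`; args: principals that must exist.
# Prints each missing principal and returns 1 if any is missing.
missing_principals() {
    local listing p rc=0
    listing=$(cat)
    for p in "$@"; do
        if ! printf '%s\n' "$listing" | grep -Fqx -- "$p"; then
            printf '%s\n' "$p"
            rc=1
        fi
    done
    return "$rc"
}
```

Usage: `kadmin.local -q listprincs | missing_principals admin/admin@WINNER.COM winner_spark@WINNER.COM`.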

Install and Test Kerberos Client

After the Kerberos client has been installed and tested, click Next.

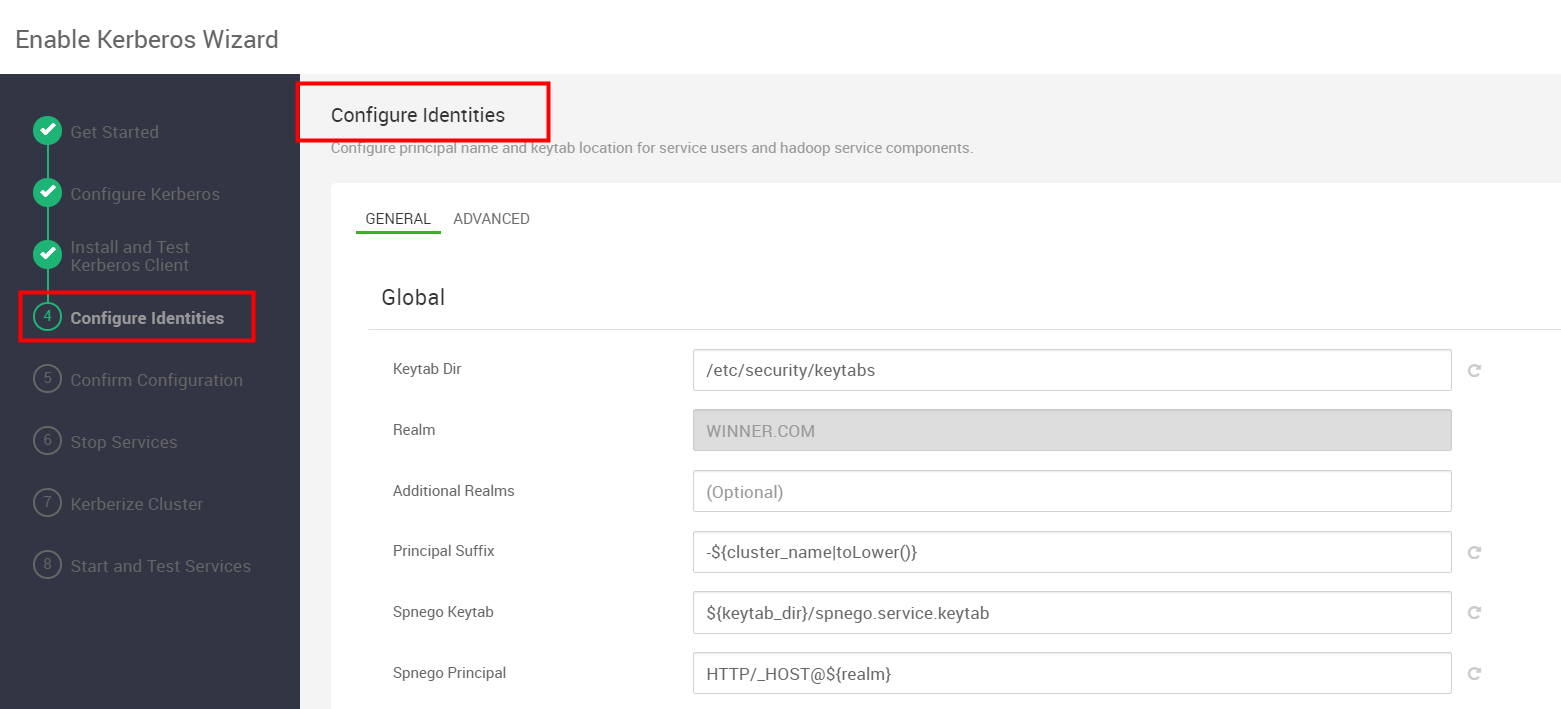

Configure Identities

Keep the defaults and click Next.

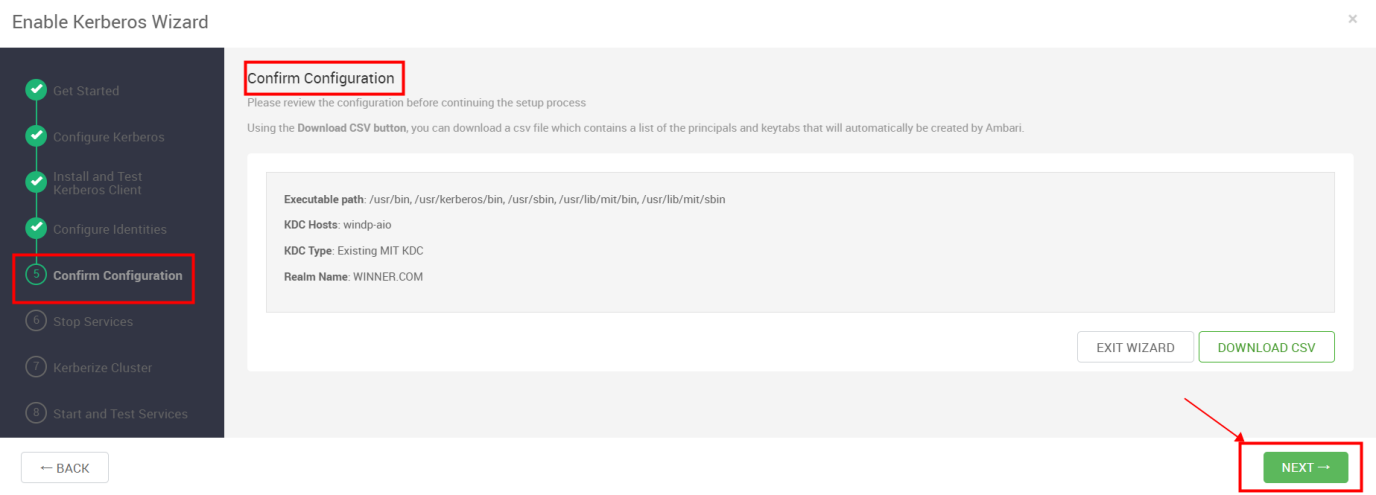

Confirm Configuration

Keep the defaults and click Next.

Stop Services

Stop all services; when this completes, click Next.

Kerberize Cluster

When all steps pass, click Next; if a step fails, retry it and fix the problem.

Start and Test Services

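Start all services and run the service checks; starting everything takes about 10 minutes.

When startup completes, click Next.

If startup fails, retry it; otherwise just click COMPLETE and then fix the components that did not start, one at a time.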

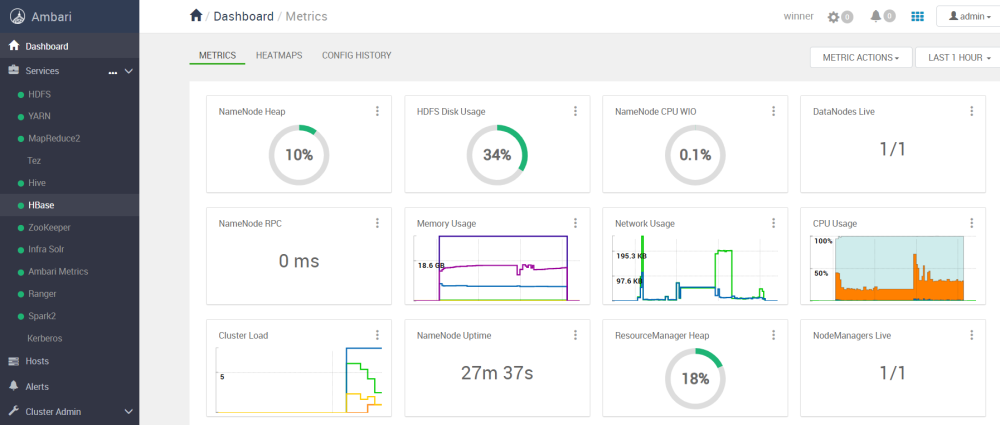

Startup complete.

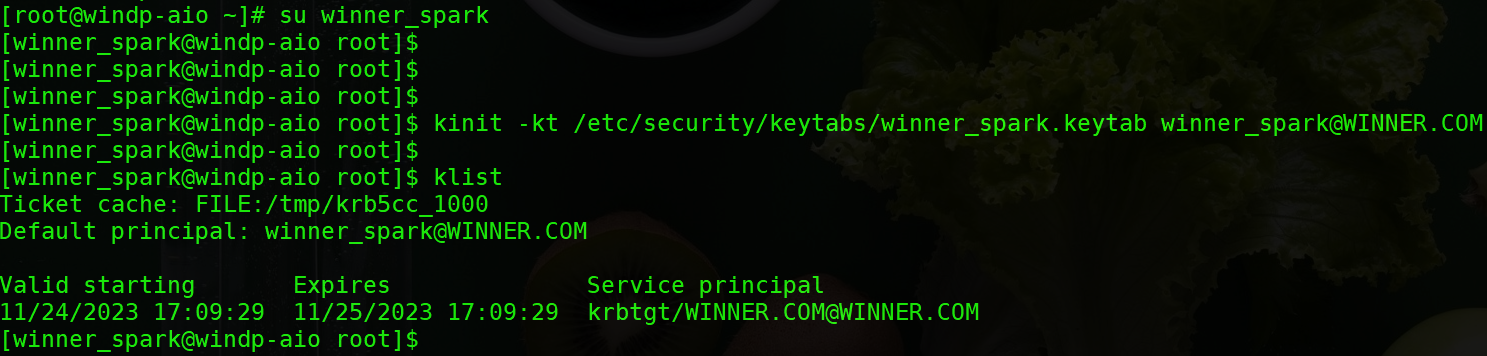

Client authentication
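install_base.sh creates the principal `winner_spark@WINNER.COM` and exports its keytab to `/etc/security/keytabs/winner_spark.keytab`, owned by the `winner_spark` Linux user. A minimal sketch of authenticating a client with that keytab; `kinit_with_keytab` is a hypothetical helper built on `kinit -kt` and `klist -s` (which exits 0 only when a readable, unexpired credential cache exists).

```bash
#!/bin/bash
# Obtain a ticket from a keytab: kinit_with_keytab USER [REALM] [KEYTAB_DIR]
kinit_with_keytab() {
    local user=$1 realm=${2:-WINNER.COM} keytab_dir=${3:-/etc/security/keytabs}
    local keytab="$keytab_dir/$user.keytab"
    [ -r "$keytab" ] || { echo "cannot read $keytab" >&2; return 1; }
    kinit -kt "$keytab" "$user@$realm" || return 1
    # Succeeds only if a valid ticket cache now exists.
    klist -s
}
```

Run it as the `winner_spark` user, since the keytab is owned by that user; afterwards `klist` shows the ticket, and Kerberized commands such as `hdfs dfs -ls /` should then authenticate with it.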

7. Script Appendix

Package structure and configuration files

install_base.sh

```bash
#!/bin/bash
#
# Author: kangll
# CreateTime: 2023-11-10
# Desc: base environment setup: server settings, JDK, passwordless SSH, Kerberos
#
#set -x
BASEDIR=$(cd "$(dirname "$0")"; pwd)
# Load the configuration
source $BASEDIR/config/global.sh

# public: host name and root password
ip=$(ip addr show | grep -E 'inet [0-9]' | awk '{print $2}' | awk -F '/' '{print $1}' | sed -n '$p')
ssh_hosts=${ip}
ssh_networkname=(windp-aio)
# taken from global.sh
ssh_passwd=$root_passwd
kerberos_user=winner_spark

###########################
# Basic OS configuration
###########################
base_os_config() {
    # tools
    sudo yum install expect bash-completion lrzsz tree vim wget net-tools libaio perl -y
    # hostname
    sudo hostnamectl set-hostname $ssh_networkname
    # hosts
    sudo cat > /etc/hosts << EOF
$ip $ssh_networkname
EOF
    ## disable firewalld
    sudo systemctl stop firewalld
    sudo systemctl disable firewalld
    sudo systemctl status firewalld
    ## disable selinux
    sudo sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config
    sudo setenforce 0
    ## disable transparent huge pages at boot
    ## (rc.local needs a shebang and the execute bit, otherwise rc-local.service cannot run it)
    cat > /etc/rc.local << EOF
#!/bin/bash
if test -f /sys/kernel/mm/transparent_hugepage/defrag; then
    echo never > /sys/kernel/mm/transparent_hugepage/defrag
fi
if test -f /sys/kernel/mm/transparent_hugepage/enabled; then
    echo never > /sys/kernel/mm/transparent_hugepage/enabled
fi
EOF
    chmod +x /etc/rc.d/rc.local
    ## chrony
    sudo yum install chrony -y
    sudo systemctl restart chronyd
    sudo systemctl enable chronyd
    sudo systemctl status chronyd
    # httpd
    sudo yum -y install httpd
    sudo systemctl restart httpd.service
    sudo systemctl enable httpd.service
    sudo systemctl status httpd.service
    ## kerberos client
    sudo yum install -y krb5-workstation krb5-libs
}

########################
# Generate the local SSH key pair
########################
create_ssh_pub(){
    echo "Generating the local SSH key pair"
    /usr/bin/expect << eof
# timeout (seconds) to wait for each expected prompt
set timeout 30
spawn ssh-keygen -t rsa -b 1024
## match prompts continuously
expect {
    ".ssh/id_rsa)" { send "\n"; exp_continue }
    "Overwrite (y/n)?" { send "y\n"; exp_continue }
    "no passphrase):" { send "\n"; exp_continue }
    "passphrase again:" { send "\n"; exp_continue }
}
eof
}

########################
# Copy the SSH public key: copy_ssh USER HOST
# ($1 = user, $2 = host, passed in by config_ssh)
########################
copy_ssh(){
    if [ ! -f /root/.ssh/id_rsa.pub ];then
        create_ssh_pub
    fi
    echo "Copying the public key to the target host"
    /usr/bin/expect << eof
# timeout (seconds) to wait for each expected prompt
set timeout 30
spawn ssh-copy-id -i /root/.ssh/id_rsa.pub ${1}@${2}
## match prompts continuously
expect {
    "connecting (yes/no)?" { send "yes\n"; exp_continue }
    "s password:" { send "${ssh_passwd}\n"; exp_continue }
}
eof
}

########################
# Passwordless SSH
########################
config_ssh() {
    for name in ${ssh_networkname[*]};do
        timeout 5 ssh root@${name} "echo ${name}: 'This is success!'"
        if [[ $? -ne 0 ]];then
            echo "Copying key to: ${name}"
            copy_ssh root ${name} > /dev/null
        fi
    done
    echo "********** ssh installation completed **********"
}

########################
# JDK environment
########################
config_java_home() {
    ## java
    sudo mkdir -p /usr/java
    if [ -f $BASEDIR/jdk-8u162-linux-x64.tar.gz ];then
        tar -zxvf $BASEDIR/jdk-8u162-linux-x64.tar.gz -C /usr/java
    fi
    java_home=$(cat /etc/profile | grep 'JAVA_HOME=')
    if [ -z "$java_home" ]; then
        echo 'export JAVA_HOME=/usr/java/jdk1.8.0_162' >> /etc/profile
        echo 'export CLASSPATH=$JAVA_HOME/lib/' >> /etc/profile
        echo 'export PATH=$PATH:$JAVA_HOME/bin' >> /etc/profile
    fi
    source /etc/profile
    java -version
    echo "********** JDK installation completed **********"
}

########################
# Install, configure and start Kerberos
########################
config_krb5() {
    # kerberos server and client
    sudo yum install krb5-server krb5-libs krb5-workstation -y
    # config files
    cat $BASEDIR/config/krb5.conf > /etc/krb5.conf
    cat $BASEDIR/config/kdc.conf > /var/kerberos/krb5kdc/kdc.conf
    cat $BASEDIR/config/kadm5.acl > /var/kerberos/krb5kdc/kadm5.acl
    echo "******* Creating the KDC database *********"
    /usr/bin/expect << eof
# timeout (seconds) to wait for each expected prompt
set timeout 30
spawn kdb5_util create -s -r WINNER.COM
## match prompts continuously
expect {
    "Enter KDC database master key:" { send "${ssh_passwd}\n"; exp_continue }
    "master key to verify:" { send "${ssh_passwd}\n"; exp_continue }
}
eof
    echo "******** Creating the admin principal *********"
    /usr/bin/expect << eof
# timeout (seconds) to wait for each expected prompt
set timeout 30
spawn kadmin.local
## match prompts continuously
expect {
    "kadmin.local:" { send "addprinc admin/admin\n"; exp_continue }
    "Enter password for principal" { send "${ssh_passwd}\n"; exp_continue }
    "Re-enter password for principal" { send "${ssh_passwd}\n"; }
}
expect "kadmin.local:" { send "quit\r"; }
eof
    # start kdc and kadmin
    sudo systemctl restart krb5kdc
    sudo systemctl enable krb5kdc
    sudo systemctl restart kadmin
    sudo systemctl enable kadmin
    # add linux user
    useradd winner_spark
    # keytab directory
    mkdir -p /etc/security/keytabs/
    echo "********** kerberos installation completed **********"
}

##################################
# Kerberos user winner_spark:
# create the principal and its keytab file
##################################
config_kerberos_user() {
    echo "******** Creating the winner_spark principal ********"
    /usr/bin/expect << eof
# timeout (seconds) to wait for each expected prompt
set timeout 30
spawn kadmin.local
## match prompts continuously
expect {
    "kadmin.local:" { send "addprinc ${kerberos_user}\n"; exp_continue }
    "Enter password for principal" { send "${ssh_passwd}\n"; exp_continue }
    "Re-enter password for principal" { send "${ssh_passwd}\n"; }
}
expect "kadmin.local:" { send "quit\r"; }
eof
    echo "******** Generating the keytab for winner_spark ********"
    /usr/bin/expect << eof
# timeout (seconds) to wait for each expected prompt
set timeout 30
spawn kadmin.local
## match prompts continuously
expect {
    "kadmin.local:" { send "xst -k /etc/security/keytabs/${kerberos_user}.keytab ${kerberos_user}@WINNER.COM\n"; }
}
expect "kadmin.local:" { send "quit\r"; }
eof
    sleep 2s
    # set keytab ownership
    chown ${kerberos_user}:${kerberos_user} /etc/security/keytabs/${kerberos_user}.keytab
    echo "********** kerberos user winner_spark add completed **********"
}

# base environment
base_os_config
# passwordless SSH for root
config_ssh
# JDK
config_java_home
# install and start Kerberos
config_krb5
# Kerberos user winner_spark and its keytab
config_kerberos_user
```

install_mysql.sh

```bash
#!/bin/bash
#
# Author: kangll
# CreateTime: 2023-11-10
# Desc: install mysql 5.7.44
#
echo "******** INSTALL MYSQL *********"
####################################
BASEDIR=$(cd "$(dirname "$0")"; pwd)
# Load the default database connection settings
source $BASEDIR/config/global.sh
install_path=$mysql_install_path
hostname=`"hostname"`
#####################################
# Remove any existing MariaDB packages
OLD_MYSQL=`rpm -qa|grep mariadb`
profile=/etc/profile
for mariadb in $OLD_MYSQL
do
    rpm -e --nodeps $mariadb
done
# Remove the old my.cnf
sudo rm -rf /etc/my.cnf
# Add the mysql group and user
sudo groupadd mysql
sudo useradd -g mysql mysql
# Extract the MySQL package and rename the directory
tar -zxvf $BASEDIR/mysql-5.7.44-el7-x86_64.tar.gz -C $install_path
sudo mv $install_path/mysql-5.7.44-el7-x86_64 $install_path/mysql
# Change the owning user and group
sudo chown -R mysql $install_path/mysql
sudo chgrp -R mysql $install_path/mysql
sudo mkdir -p $install_path/mysql/data
sudo mkdir -p $install_path/mysql/log
sudo chown -R mysql:mysql $install_path/mysql/data
# Copy my.cnf (its content is listed under my.cnf below)
cp -f $BASEDIR/config/my.cnf $install_path/mysql/
# Initialize MySQL
$install_path/mysql/bin/mysql_install_db --user=mysql --basedir=$install_path/mysql/ --datadir=$install_path/mysql/data/
# Set file and directory permissions
cp $install_path/mysql/support-files/mysql.server /etc/init.d/mysqld
# mysqld ignores world-writable option files, so keep my.cnf at 644
sudo chmod 644 $install_path/mysql/my.cnf
sudo chmod +x /etc/init.d/mysqld
sudo mkdir /var/lib/mysql
sudo chmod 777 /var/lib/mysql
# Start MySQL
/etc/init.d/mysqld start
# Start on boot
chkconfig --level 35 mysqld on
chmod +x /etc/rc.d/init.d/mysqld
chkconfig --add mysqld
# Update environment variables
# (the line number must be adjusted to the actual file)
#sed '78s/$/&:\/usr\/local\/mysql\/bin/' -i $profile
ln -s $install_path/mysql/bin/mysql /usr/bin
ln -s /var/lib/mysql/mysql.sock /tmp/
cat > /etc/profile.d/mysql.sh …
```

The following script configures the repo sources and installs and starts Ambari:

install_repo.sh

```bash
#!/bin/bash
#
# Author: kangll
# CreateTime: 2023-11-10
# Desc: configure the HDP repo
#
set -x
BASEDIR=$(cd "$(dirname "$0")"; pwd)
#
source $BASEDIR/config/global.sh
# HDP tar install path
config_path=$install_path
tar_name=$hdp_tar_name

###########################
# Configure the HDP repo
###########################
config_repo() {
    mkdir -p $config_path
    if [ -f $BASEDIR/$tar_name ];then
        tar -zxvf $BASEDIR/$tar_name -C $config_path
    fi
    sudo ln -s $config_path/hdp/ambari /var/www/html/ambari
    sudo ln -s $config_path/hdp/HDP /var/www/html/HDP
    sudo ln -s $config_path/hdp/HDP-GPL /var/www/html/HDP-GPL
    sudo ln -s $config_path/hdp/HDP-UTILS /var/www/html/HDP-UTILS
    sudo cp -f $BASEDIR/repo/* /etc/yum.repos.d/
    sudo yum clean all
    sudo yum makecache
    sudo yum repolist
    echo "********** repo installation completed **********"
}

###########################
# Initialize the databases
###########################
config_db() {
    mysql -h${myurl} -u${myuser} -p${mypwd} < $BASEDIR/config/init_db.sql
    mysql -h${myurl} -u${myuser} -p${mypwd} ambari < $BASEDIR/config/Ambari-DDL-MySQL-CREATE.sql
}

###########################
# Install Ambari
###########################
install_ambari() {
    sudo yum install ambari-server -y
    sudo mkdir -p /usr/share/java/
    cp $BASEDIR/config/mysql-connector-java.jar /usr/share/java/
    cat $BASEDIR/config/ambari.properties > /etc/ambari-server/conf/ambari.properties
    cp $BASEDIR/config/password.dat /etc/ambari-server/conf/
    ambari-server restart
    ambari-server status
}

###########################
# Modify Ambari service definitions: the services below are hidden and not installed by default
###########################
config_metainfo_modify(){
    stack_path=/var/lib/ambari-server/resources/stacks/HDP
    cat $BASEDIR/repo/services/ACCUMULO/metainfo.xml > $stack_path/3.0/services/ACCUMULO/metainfo.xml
    cat $BASEDIR/repo/services/KAFKA/metainfo.xml > $stack_path/3.1/services/KAFKA/metainfo.xml
    cat $BASEDIR/repo/services/PIG/metainfo.xml > $stack_path/3.1/services/PIG/metainfo.xml
    cat $BASEDIR/repo/services/DRUID/metainfo.xml > $stack_path/3.0/services/DRUID/metainfo.xml
    cat $BASEDIR/repo/services/LOGSEARCH/metainfo.xml > $stack_path/3.0/services/LOGSEARCH/metainfo.xml
    cat $BASEDIR/repo/services/SUPERSET/metainfo.xml > $stack_path/3.0/services/SUPERSET/metainfo.xml
    cat $BASEDIR/repo/services/ATLAS/metainfo.xml > $stack_path/3.1/services/ATLAS/metainfo.xml
    cat $BASEDIR/repo/services/ZEPPELIN/metainfo.xml > $stack_path/3.0/services/ZEPPELIN/metainfo.xml
    cat $BASEDIR/repo/services/STORM/metainfo.xml > $stack_path/3.0/services/STORM/metainfo.xml
    cat $BASEDIR/repo/services/RANGER_KMS/metainfo.xml > $stack_path/3.1/services/RANGER_KMS/metainfo.xml
    cat $BASEDIR/repo/services/OOZIE/metainfo.xml > $stack_path/3.0/services/OOZIE/metainfo.xml
    cat $BASEDIR/repo/services/KNOX/metainfo.xml > $stack_path/3.1/services/KNOX/metainfo.xml
    cat $BASEDIR/repo/services/SQOOP/metainfo.xml > $stack_path/3.0/services/SQOOP/metainfo.xml
    cat $BASEDIR/repo/services/SMARTSENSE/metainfo.xml > $stack_path/3.0/services/SMARTSENSE/metainfo.xml
    ambari-server restart
}

config_repo
config_db
install_ambari
config_metainfo_modify
```

```bash
cat repo/services/ACCUMULO/metainfo.xml
```

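These components are hidden, so they are not installed by default and do not appear in the list of services to choose from during installation.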

2.0 ACCUMULO true

global.sh

```bash
#######################
# Global deployment parameters
#######################
# root password
root_passwd=winner@001
# MySQL settings
myurl=127.0.0.1
myuser=root
mypwd=Winner001
myport=3306
mydb=ipvacloud
# HDP package extraction directory
install_path=/hadoop
hdp_tar_name=HDP-WinDP-AIO-Platform-final.tar.gz
# MySQL installation directory
mysql_install_path=/usr/local
```

init_db.sql

```sql
use mysql;
GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' IDENTIFIED BY 'Winner001' WITH GRANT OPTION;

create database ambari character set utf8;
CREATE USER 'ambari'@'%' IDENTIFIED BY 'Winner001';
GRANT ALL PRIVILEGES ON *.* TO 'ambari'@'%';
FLUSH PRIVILEGES;

create database hive character set utf8;
CREATE USER 'hive'@'%' IDENTIFIED BY 'Winner001';
GRANT ALL PRIVILEGES ON *.* TO 'hive'@'%';
FLUSH PRIVILEGES;

create database ranger character set utf8;
CREATE USER 'ranger'@'%' IDENTIFIED BY 'Winner001';
GRANT ALL PRIVILEGES ON *.* TO 'ranger'@'%';
FLUSH PRIVILEGES;
```

ambari.properties: the Ambari configuration file; the database connection settings and the JDK path need to be adjusted.

```properties
#
# Copyright 2011 The Apache Software Foundation
#
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
#
#Sat Nov 04 12:10:44 CST 2023
agent.package.install.task.timeout=1800
agent.stack.retry.on_repo_unavailability=false
agent.stack.retry.tries=5
agent.task.timeout=900
agent.threadpool.size.max=25
ambari-server.user=root
ambari.python.wrap=ambari-python-wrap
bootstrap.dir=/var/run/ambari-server/bootstrap
bootstrap.script=/usr/lib/ambari-server/lib/ambari_server/bootstrap.py
bootstrap.setup_agent.script=/usr/lib/ambari-server/lib/ambari_server/setupAgent.py
check_database_skipped=false
client.threadpool.size.max=25
common.services.path=/var/lib/ambari-server/resources/common-services
custom.action.definitions=/var/lib/ambari-server/resources/custom_action_definitions
custom.mysql.jdbc.name=mysql-connector-java.jar
extensions.path=/var/lib/ambari-server/resources/extensions
gpl.license.accepted=true
http.cache-control=no-store
http.charset=utf-8
http.pragma=no-cache
http.strict-transport-security=max-age=31536000
http.x-content-type-options=nosniff
http.x-frame-options=DENY
http.x-xss-protection=1; mode=block
java.home=/usr/java/jdk1.8.0_162
java.releases=jdk1.8
java.releases.ppc64le=
jce.download.supported=true
jdk.download.supported=true
jdk1.8.desc=Oracle JDK 1.8 + Java Cryptography Extension (JCE) Policy Files 8
jdk1.8.dest-file=jdk-8u112-linux-x64.tar.gz
jdk1.8.home=/usr/jdk64/
jdk1.8.jcpol-file=jce_policy-8.zip
jdk1.8.jcpol-url=http://public-repo-1.hortonworks.com/ARTIFACTS/jce_policy-8.zip
jdk1.8.re=(jdk.*)/jre
jdk1.8.url=http://public-repo-1.hortonworks.com/ARTIFACTS/jdk-8u112-linux-x64.tar.gz
kerberos.keytab.cache.dir=/var/lib/ambari-server/data/cache
kerberos.operation.verify.kdc.trust=true
metadata.path=/var/lib/ambari-server/resources/stacks
mpacks.staging.path=/var/lib/ambari-server/resources/mpacks
pid.dir=/var/run/ambari-server
previous.custom.mysql.jdbc.name=mysql-connector-java.jar
recommendations.artifacts.lifetime=1w
recommendations.dir=/var/run/ambari-server/stack-recommendations
resources.dir=/var/lib/ambari-server/resources
rolling.upgrade.skip.packages.prefixes=
security.server.disabled.ciphers=TLS_ECDHE_ECDSA_WITH_AES_256_CBC_SHA384|TLS_ECDHE_RSA_WITH_AES_256_CBC_SHA384|TLS_RSA_WITH_AES_256_CBC_SHA256|TLS_ECDH_ECDSA_WITH_AES_256_CBC_SHA384|TLS_ECDH_RSA_WITH_AES_256_CBC_SHA384|TLS_DHE_RSA_WITH_AES_256_CBC_SHA256|TLS_DHE_DSS_WITH_AES_256_CBC_SHA256|TLS_ECDHE_ECDSA_WITH_AES_256_CBC_SHA|TLS_ECDHE_RSA_WITH_AES_256_CBC_SHA|TLS_RSA_WITH_AES_256_CBC_SHA|TLS_ECDH_ECDSA_WITH_AES_256_CBC_SHA|TLS_ECDH_RSA_WITH_AES_256_CBC_SHA|TLS_DHE_RSA_WITH_AES_256_CBC_SHA|TLS_DHE_DSS_WITH_AES_256_CBC_SHA|TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA256|TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA256|TLS_RSA_WITH_AES_128_CBC_SHA256|TLS_ECDH_ECDSA_WITH_AES_128_CBC_SHA256|TLS_ECDH_RSA_WITH_AES_128_CBC_SHA256|TLS_DHE_RSA_WITH_AES_128_CBC_SHA256|TLS_DHE_DSS_WITH_AES_128_CBC_SHA256|TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA|TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA|TLS_RSA_WITH_AES_128_CBC_SHA|TLS_ECDH_ECDSA_WITH_AES_128_CBC_SHA|TLS_ECDH_RSA_WITH_AES_128_CBC_SHA|TLS_DHE_RSA_WITH_AES_128_CBC_SHA|TLS_DHE_DSS_WITH_AES_128_CBC_SHA|TLS_ECDHE_ECDSA_WITH_3DES_EDE_CBC_SHA|TLS_ECDHE_RSA_WITH_3DES_EDE_CBC_SHA|TLS_ECDH_ECDSA_WITH_3DES_EDE_CBC_SHA|TLS_ECDH_RSA_WITH_3DES_EDE_CBC_SHA|SSL_DHE_RSA_WITH_3DES_EDE_CBC_SHA|SSL_DHE_DSS_WITH_3DES_EDE_CBC_SHA|TLS_EMPTY_RENEGOTIATION_INFO_SCSV|TLS_DH_anon_WITH_AES_256_CBC_SHA256|TLS_ECDH_anon_WITH_AES_256_CBC_SHA|TLS_DH_anon_WITH_AES_256_CBC_SHA|TLS_DH_anon_WITH_AES_128_CBC_SHA256|TLS_ECDH_anon_WITH_AES_128_CBC_SHA|TLS_DH_anon_WITH_AES_128_CBC_SHA|TLS_ECDH_anon_WITH_3DES_EDE_CBC_SHA|SSL_DH_anon_WITH_3DES_EDE_CBC_SHA|SSL_RSA_WITH_DES_CBC_SHA|SSL_DHE_RSA_WITH_DES_CBC_SHA|SSL_DHE_DSS_WITH_DES_CBC_SHA|SSL_DH_anon_WITH_DES_CBC_SHA|SSL_RSA_EXPORT_WITH_DES40_CBC_SHA|SSL_DHE_RSA_EXPORT_WITH_DES40_CBC_SHA|SSL_DHE_DSS_EXPORT_WITH_DES40_CBC_SHA|SSL_DH_anon_EXPORT_WITH_DES40_CBC_SHA|TLS_RSA_WITH_NULL_SHA256|TLS_ECDHE_ECDSA_WITH_NULL_SHA|TLS_ECDHE_RSA_WITH_NULL_SHA|SSL_RSA_WITH_NULL_SHA|TLS_ECDH_ECDSA_WITH_NULL_SHA|TLS_ECDH_RSA_WITH_NULL_SHA|TLS_ECDH_anon_WITH_NULL_SHA|SSL_RSA_WITH_NULL_MD5|TLS_KRB5_WITH_3DES_EDE_CBC_SHA|TLS_KRB5_WITH_3DES_EDE_CBC_MD5|TLS_KRB5_WITH_DES_CBC_SHA|TLS_KRB5_WITH_DES_CBC_MD5|TLS_KRB5_EXPORT_WITH_DES_CBC_40_SHA|TLS_KRB5_EXPORT_WITH_DES_CBC_40_MD5
security.server.keys_dir=/var/lib/ambari-server/keys
server.connection.max.idle.millis=900000
server.execution.scheduler.isClustered=false
server.execution.scheduler.maxDbConnections=5
server.execution.scheduler.maxThreads=5
server.execution.scheduler.misfire.toleration.minutes=480
server.fqdn.service.url=http://169.254.169.254/latest/meta-data/public-hostname
server.http.session.inactive_timeout=1800
server.jdbc.connection-pool=c3p0
server.jdbc.connection-pool.acquisition-size=5
server.jdbc.connection-pool.idle-test-interval=7200
server.jdbc.connection-pool.max-age=0
server.jdbc.connection-pool.max-idle-time=14400
server.jdbc.connection-pool.max-idle-time-excess=0
server.jdbc.database=mysql
server.jdbc.database_name=ambari
server.jdbc.driver=com.mysql.jdbc.Driver
server.jdbc.driver.path=/usr/share/java/mysql-connector-java.jar
server.jdbc.hostname=localhost
server.jdbc.port=3306
server.jdbc.rca.driver=com.mysql.jdbc.Driver
server.jdbc.rca.url=jdbc:mysql://localhost:3306/ambari
server.jdbc.rca.user.name=ambari
server.jdbc.rca.user.passwd=/etc/ambari-server/conf/password.dat
server.jdbc.url=jdbc:mysql://localhost:3306/ambari
server.jdbc.user.name=ambari
server.jdbc.user.passwd=/etc/ambari-server/conf/password.dat
server.os_family=redhat7
server.os_type=centos7
server.persistence.type=remote
server.python.log.level=INFO
server.python.log.name=ambari-server-command.log
server.stages.parallel=true
server.task.timeout=1200
server.tmp.dir=/var/lib/ambari-server/data/tmp
server.version.file=/var/lib/ambari-server/resources/version
shared.resources.dir=/usr/lib/ambari-server/lib/ambari_commons/resources
skip.service.checks=false
stack.java.home=/usr/java/jdk1.8.0_162
stackadvisor.script=/var/lib/ambari-server/resources/scripts/stack_advisor.py
ulimit.open.files=65536
upgrade.parameter.convert.hive.tables.timeout=86400
upgrade.parameter.move.hive.tables.timeout=86400
user.inactivity.timeout.default=0
user.inactivity.timeout.role.readonly.default=0
views.ambari.request.connect.timeout.millis=30000
views.ambari.request.read.timeout.millis=45000
views.http.cache-control=no-store
views.http.charset=utf-8
views.http.pragma=no-cache
views.http.strict-transport-security=max-age=31536000
views.http.x-content-type-options=nosniff
views.http.x-frame-options=SAMEORIGIN
views.http.x-xss-protection=1; mode=block
views.request.connect.timeout.millis=5000
views.request.read.timeout.millis=10000
views.skip.home-directory-check.file-system.list=wasb,adls,adl
webapp.dir=/usr/lib/ambari-server/web
```

password.dat (database password)

Winner001

my.cnf

```ini
[mysql]
socket=/var/lib/mysql/mysql.sock
# set the mysql client default character set
default-character-set=utf8

[mysqld]
socket=/var/lib/mysql/mysql.sock
# set the mysql server port
port = 3306
# set the mysql install base dir
basedir=/usr/local/mysql
# set the data store dir
datadir=/usr/local/mysql/data
# set the maximum number of allowed connections
max_connections=200
# set the server default character set
character-set-server=utf8
# the default storage engine
default-storage-engine=INNODB
lower_case_table_names=1
max_allowed_packet=16M
explicit_defaults_for_timestamp=true

[mysql.server]
user=mysql
basedir=/usr/local/mysql
```

krb5.conf (Kerberos configuration)

```ini
[libdefaults]
  renew_lifetime = 7d
  forwardable = true
  default_realm = WINNER.COM
  ticket_lifetime = 24h
  dns_lookup_realm = false
  dns_lookup_kdc = false
  default_ccache_name = /tmp/krb5cc_%{uid}
  #default_tgs_enctypes = aes des3-cbc-sha1 rc4 des-cbc-md5
  #default_tkt_enctypes = aes des3-cbc-sha1 rc4 des-cbc-md5

[logging]
  default = FILE:/var/log/krb5kdc.log
  admin_server = FILE:/var/log/kadmind.log
  kdc = FILE:/var/log/krb5kdc.log

[realms]
  WINNER.COM = {
    admin_server = windp-aio
    kdc = windp-aio
  }
```

Repo sources

ambari.repo

```ini
#VERSION_NUMBER=2.7.4.0-118
[ambari-2.7.4.0]
#json.url = http://public-repo-1.hortonworks.com/HDP/hdp_urlinfo.json
name=ambari Version - ambari-2.7.4.0
baseurl=http://windp-aio/ambari/centos7/2.7.4.0-118/
gpgcheck=1
gpgkey=http://windp-aio/ambari/centos7/2.7.4.0-118/RPM-GPG-KEY/RPM-GPG-KEY-Jenkins
enabled=1
priority=1
```

hdp.repo

```ini
#VERSION_NUMBER=3.1.4.0-315
[HDP-3.1.4.0]
name=HDP Version - HDP-3.1.4.0
baseurl=http://windp-aio/HDP/centos7/3.1.4.0-315
gpgcheck=1
gpgkey=http://windp-aio/HDP/centos7/3.1.4.0-315/RPM-GPG-KEY/RPM-GPG-KEY-Jenkins
enabled=1
priority=1

[HDP-UTILS-1.1.0.22]
name=HDP-UTILS Version - HDP-UTILS-1.1.0.22
baseurl=http://windp-aio/HDP-UTILS/centos7/1.1.0.22
gpgcheck=1
gpgkey=http://windp-aio/HDP/centos7/3.1.4.0-315/RPM-GPG-KEY/RPM-GPG-KEY-Jenkins
enabled=1
priority=1
```

hdp.gpl.repo

```ini
#VERSION_NUMBER=3.1.4.0-315
[HDP-GPL-3.1.4.0]
name=HDP-GPL Version - HDP-GPL-3.1.4.0
baseurl=http://windp-aio/HDP-GPL/centos7/3.1.4.0-315
gpgcheck=1
gpgkey=http://windp-aio/HDP-GPL/centos7/3.1.4.0-315/RPM-GPG-KEY/RPM-GPG-KEY-Jenkins
enabled=1
priority=1
```

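Summary: the above is a single-node test environment; the same procedure can be extended to multiple hosts to complete a full cluster installation.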
